April 20, 2020

Douglas Magyari

CEO/CTO

IMMY Inc., Troy, Michigan, U.S.A.

Augmented Reality and Virtual Reality glasses typically cause eyestrain, eye fatigue, and nausea if worn for more than a few minutes. The reasons for this discomfort have been well researched and documented [1, 2], but effective solutions remain elusive. The problem, commonly referred to as the Vergence Accommodation Conflict (VAC), is optically induced by lens-based optics. Humans do not experience the VAC when looking at the real world. The VAC is the disparity between the fixed focal distance of a lens-based optical system and the depth implied by the stereoscopic imagery it generates.
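To make this disparity concrete, the sketch below expresses it the way the VAC literature commonly does: as a difference in diopters (reciprocal meters) between the distance the eyes converge to and the fixed focal distance of the optics. The function name and the example distances are illustrative assumptions, not measurements from any particular headset.

```python
def vac_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch between vergence demand and accommodation demand, in diopters (1/m)."""
    if vergence_distance_m <= 0 or focal_distance_m <= 0:
        raise ValueError("distances must be positive")
    # Diopters are reciprocal meters, so the conflict is the gap between the two reciprocals.
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


# Hypothetical example: optics focused at 2.0 m, stereo content rendered at 0.5 m.
conflict = vac_diopters(vergence_distance_m=0.5, focal_distance_m=2.0)
print(f"{conflict:.2f} D")  # prints "1.50 D"
```

When the rendered depth equals the focal distance the function returns zero, which corresponds to viewing the real world, where no conflict arises.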

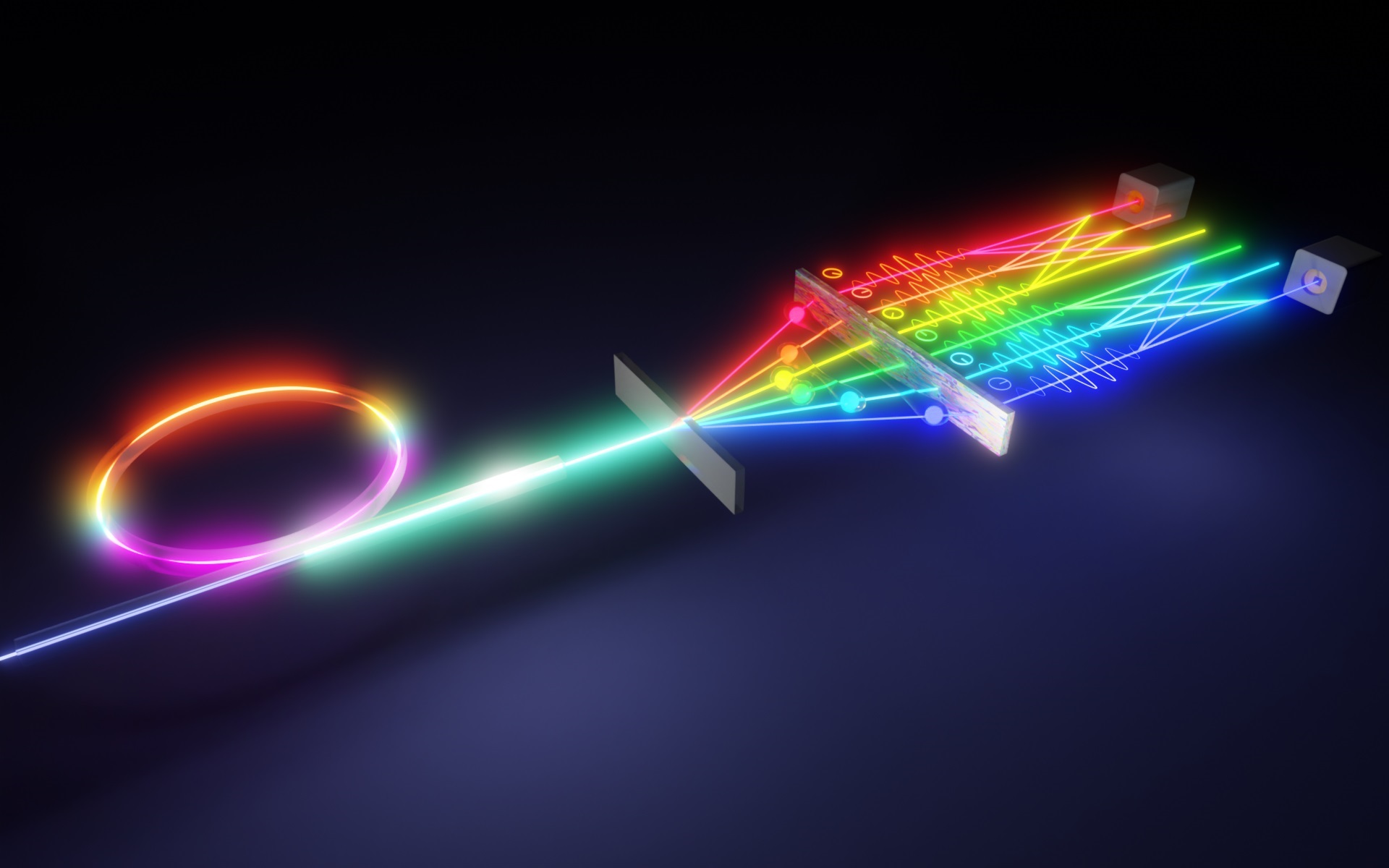

Lens-based optics produce planar wavefronts, which strip away the mono and stereo cues necessary for proper depth perception. The purpose of this paper is to challenge the existing paradigm of lens-based optical systems and to present an alternative approach that uses multiple curved, mirror-based optics to generate volumetric imagery carried by freeform wavefronts. This volumetric optical imaging technique eliminates the vergence/accommodation conflict (VAC).

Almost all Near-To-Eye (NTE) systems today use some form of lens to create their imagery. They are designed to see the world as a camera lens does, by focusing to an image plane, which is the opposite of a volume. In the real world, there is no such thing as an image plane; it is a manmade contrivance and the biggest limiting factor in current AR/VR/MR/XR optical hardware strategy.

It is fixed-distance, planar imaging that causes the vergence/accommodation conflict (VAC), eyestrain, and eye fatigue. Lens-based optics can be thought of as compressing a source volumetric image into a flat virtual image. The resulting planar wavefront produces an image that is perceived as unnatural and uncomfortable and is in focus at only one distance. There is no depth of field to the image. Moving the image plane to a different distance does not help; it is still a planar image with a planar wavefront, and it still causes eyestrain, fatigue, and nausea.

Lens-based NTE systems create 3D images by using two slightly offset planar images to produce a stereoscopic effect. They use the camera frustum to try to set a perceived distance, but a planar wavefront does not contain enough mono and stereo cues for the visual cortex to properly perceive and determine depth. This forced 3D vision effect is why so many people cannot watch 3D content for any length of time without eyestrain or nausea. Trying to force vergence is unnecessary and undesirable; it is not how the human visual system works.
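As a rough illustration of the stereoscopic geometry described above, the following sketch computes the vergence angle the two eyes must adopt to fuse a point rendered at a given distance. It uses the standard symmetric-fixation relation, angle = 2·atan(IPD / 2d). The 63 mm interpupillary distance and the function name are assumptions chosen for illustration only.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    if distance_m <= 0 or ipd_m <= 0:
        raise ValueError("distance and IPD must be positive")
    # Each eye rotates inward by atan((ipd / 2) / distance); the vergence angle is twice that.
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


# Hypothetical rendered depths: the nearer the stereo content, the larger the demanded vergence.
for d in (0.5, 1.0, 2.0):
    print(f"{d:.1f} m -> {vergence_angle_deg(d):.2f} deg")
```

The stereo pair changes this demanded angle with rendered depth while the focal distance of a lens-based system stays fixed, which is the mismatch the paragraph above describes.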

The perceived volume of vision results from light reaching the eye at different times and from many different angles. It is the multi-angular freeform wavefronts and their time differential that create the volume we see. This rich data provides the necessary mono and stereo cues to perceive depth properly. Time differential is one of the major contributing factors used by the brain’s visual cortex to determine depth. Stereoscopy, or parallax, is only a subcomponent of depth perception. If stereoscopy were the main factor in our volumetric perception of the world around us, everything would collapse into a flat plane when we covered one eye. Our brain uses not only parallax to create a 3D view but also time to perceive a volume.

The only device known today that relays volumetric imagery without compressing time is a mirror. Since we can only “see” something if light is reflecting off of it, you could say that the whole world is a mirror: it reflects light into our eyes, and the brain’s visual cortex makes a volumetric image out of the data. The other amazing attribute of mirrors is that everything in a mirror comes into focus simply by choosing what to look at. There are no “image planes” in a mirror. Mirrors relay a volume and are therefore accommodation invariant; they produce volumetric imagery at every distance.

As an example, when you look in the bathroom mirror to shave or put on makeup, you are in focus even if you are only a few inches away. If you look in the rearview mirror of your car at the kids in the backseat, they are in focus. If you then decide to look 300 feet behind you, that is also in focus, simply because you shifted your attention to something else. This happens because freeform wavefronts are being received by the eyes from all depths and multiple directions continuously.

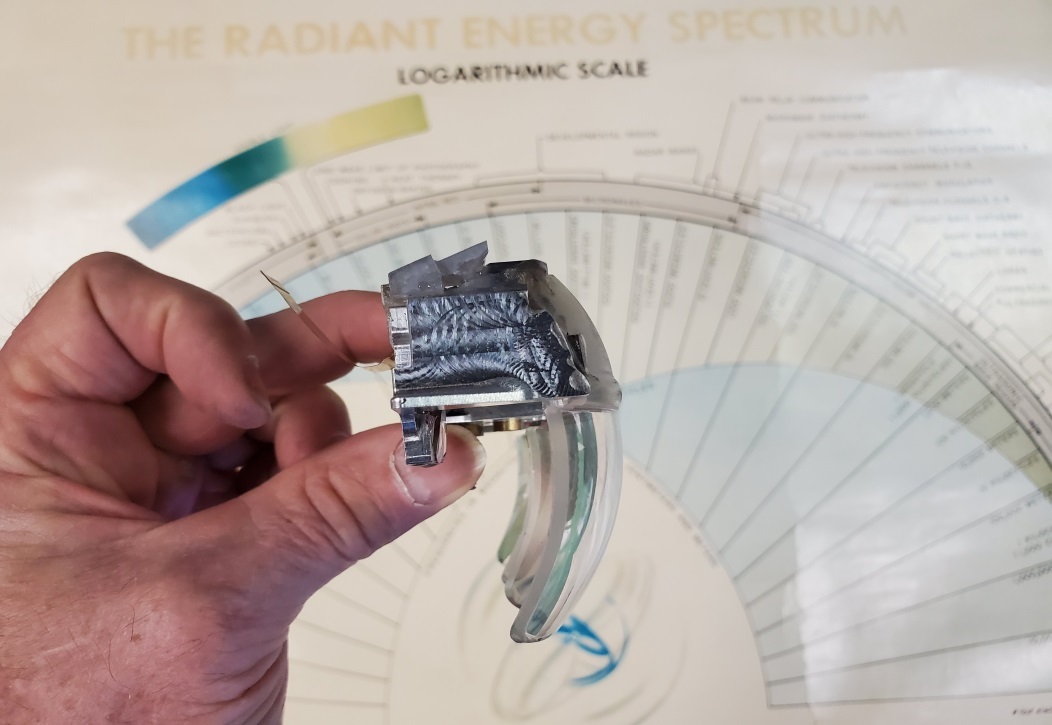

To eliminate the Vergence Accommodation Conflict, a head-mounted display must follow the rules of how humans see the real world: it must create a freeform-wavefront volumetric image. This can be accomplished by using three freeform, off-axis, compound-curved, first-surface mirrors. A volumetric mirror optic system produces genuine lightfield characteristics, so vergence and accommodation occur naturally, hence the name “Natural Eye Optic”.

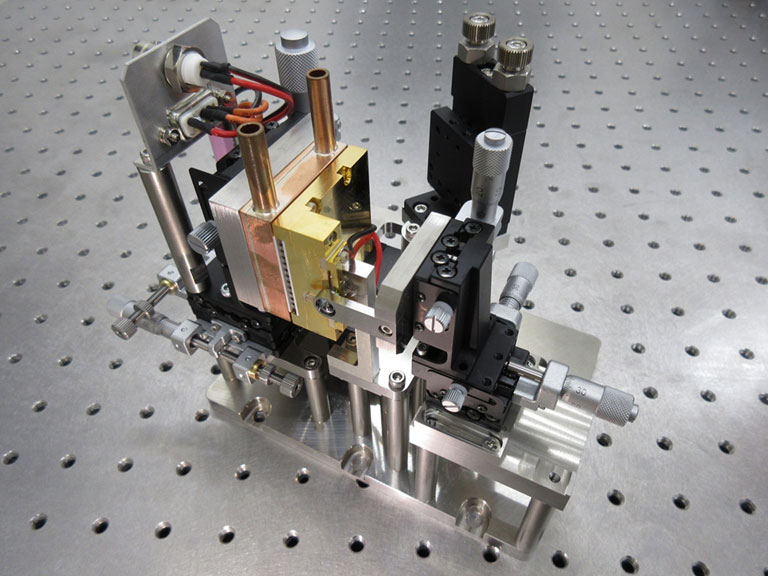

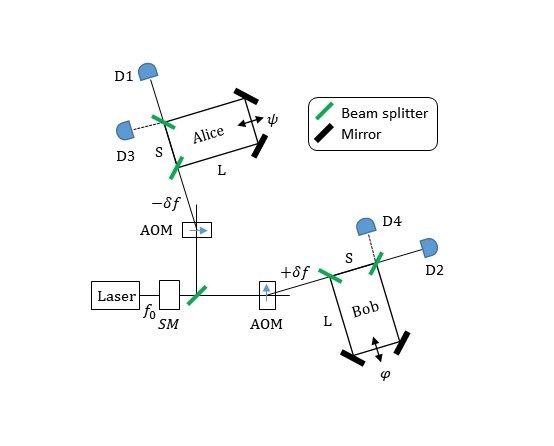

Side View of the Optic Engine

This is how the optic works:

First: It converts the original 2D OLED display light rays into a freeform wavefront. The original Lambertian (hemispherical radiation) distribution of light energy is converted into a volumetric display using a bi-concave freeform mirror [see M1 below]. The hemispherical wavefront enters the bi-concave M1 mirror with a time differential, hitting different locations on the curved surface at different times.

Second: This causes the original flat 2D display to be super-sampled (upscaled) and “seen” as a volume by the M2 mirror, so that a true volumetric freeform wavefront is relayed into the magnifying mirror, M2 [see M2 below]. Every point of every pixel from the display is visible at every point on the curved M1 mirror, enabling the entire image volume to be simulcast to the M2 with a complex time signature. This occurs as an off-axis inversion, where the rays from the M1 converge on top of one another on the M2 at approximately a 30:1 reduction in area.

Third: This incredibly complex freeform wavefront is relayed by the M2 compound convex freeform mirror to the M3 (combiner) mirror [see M3 below], creating a true lightfield volumetric image. At the M3, the ray bundle is unbundled and collimated, then relayed directly through the pupil and onto the retina.

In Summary: A freeform wavefront reflects off of the M1, M2, and M3 freeform mirror surfaces to place virtual images at any depth and from multiple viewing angles, allowing the eye to move around freely, absorbing freeform wavefront light energy from many different angles. This natural process of absorbing and processing both mono and stereo cues produces an in-focus image at the proper depth and generates an extremely large depth of field with a very large eyebox. This addresses the basic needs of human comfort, human safety, eye comfort, and eye safety.
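For a sense of scale, the roughly 30:1 area reduction between M1 and M2 mentioned in the second step can be restated as a linear factor. The sketch below assumes, purely for illustration, that the footprint shrinks by the same factor along both axes; an off-axis freeform system need not behave that way, and the function name is hypothetical.

```python
import math


def linear_scale_from_area_ratio(area_ratio: float) -> float:
    """Per-axis scale factor implied by an area ratio, assuming equal scaling in both axes."""
    if area_ratio <= 0:
        raise ValueError("area ratio must be positive")
    # Area scales with the square of linear size, so take the square root.
    return math.sqrt(area_ratio)


area_ratio = 30.0  # approximate figure quoted in the text
linear = linear_scale_from_area_ratio(area_ratio)
print(f"{linear:.2f}x per axis")  # prints "5.48x per axis"
```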

CROSS-SECTIONAL VIEW OF A FULLY REFLECTIVE NEAR-TO-EYE, MIRROR OPTIC SYSTEM

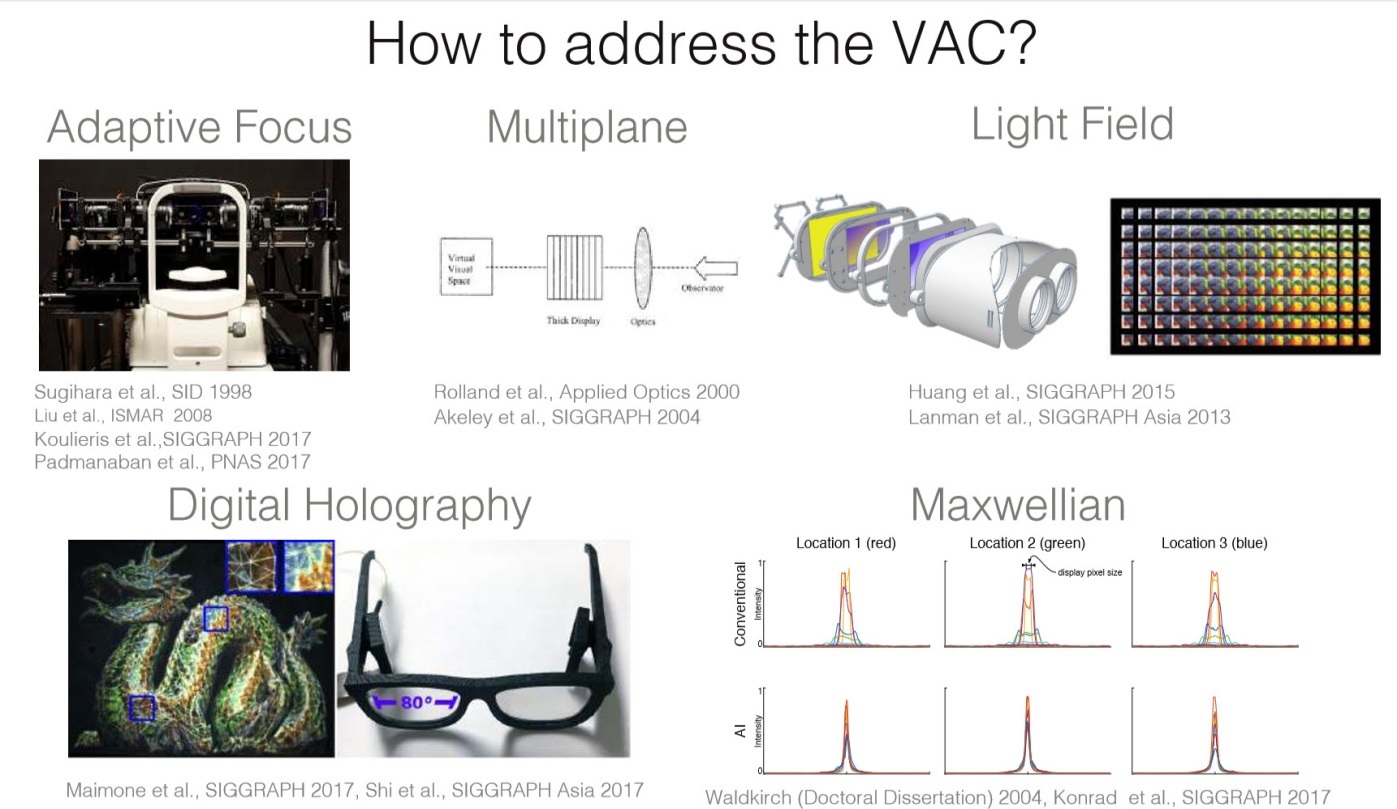

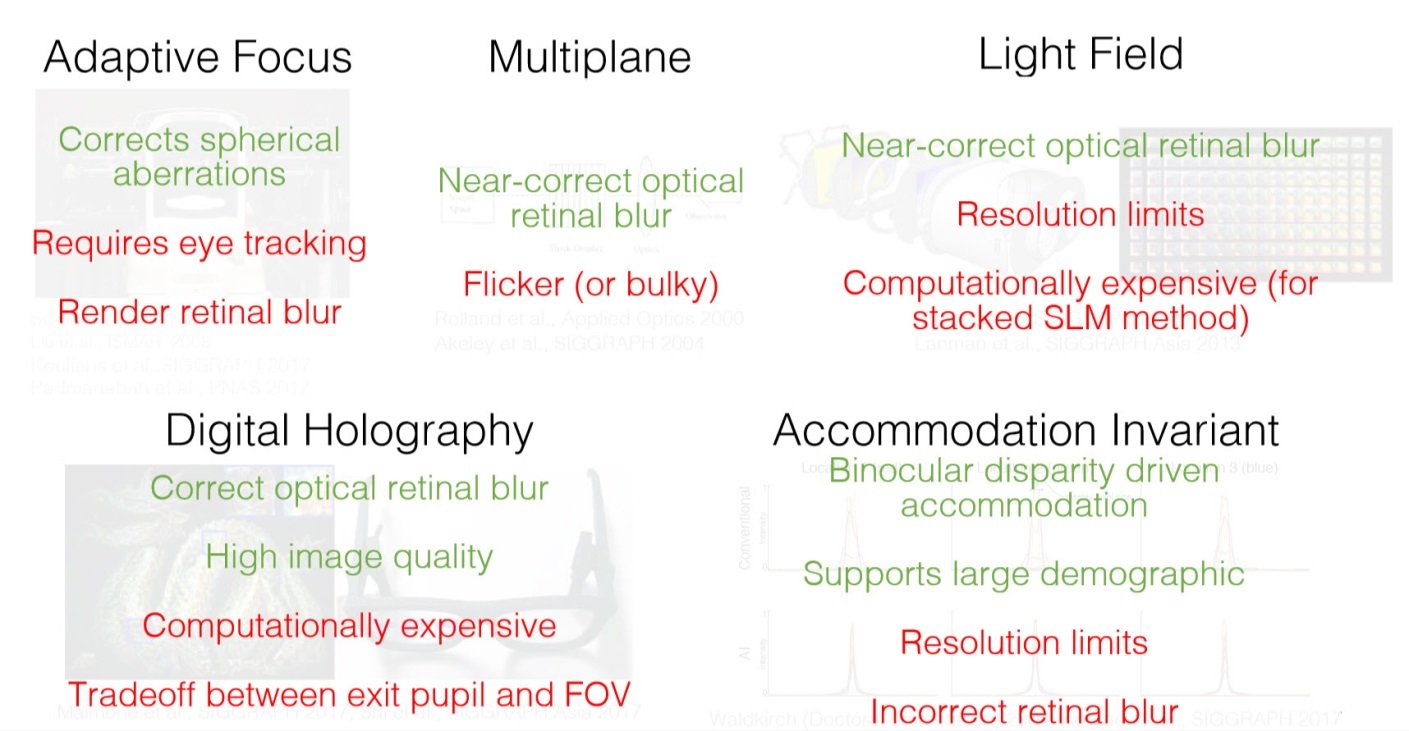

There have been many attempts to correct this lens-induced VAC problem. Several examples, taken from a SIGGRAPH presentation [3], are shown below. Any headset that uses lenses will suffer from the VAC and will require some form of correction. These correction methods are computationally intensive and expensive, and in most cases they introduce additional eyestrain, human-factors issues, weight, and cost.

A lot of work has gone into deliberately blurring portions of the image to try to trick the brain into understanding the depth of an image. Forcing the eye to accommodate with blurring and PSF (point spread function) contrast causes eyestrain and does not resolve the real-world disparity issue. In other words, it doesn’t really work. The human vision system is far too complex for this issue to be solved artificially. Perhaps even more importantly, most of these attempted solutions simply change the focal distance of the generated planar wavefront, which is not a solution to the actual problem. The real problem is how to get freeform light, rather than a planar wavefront, into the eye.

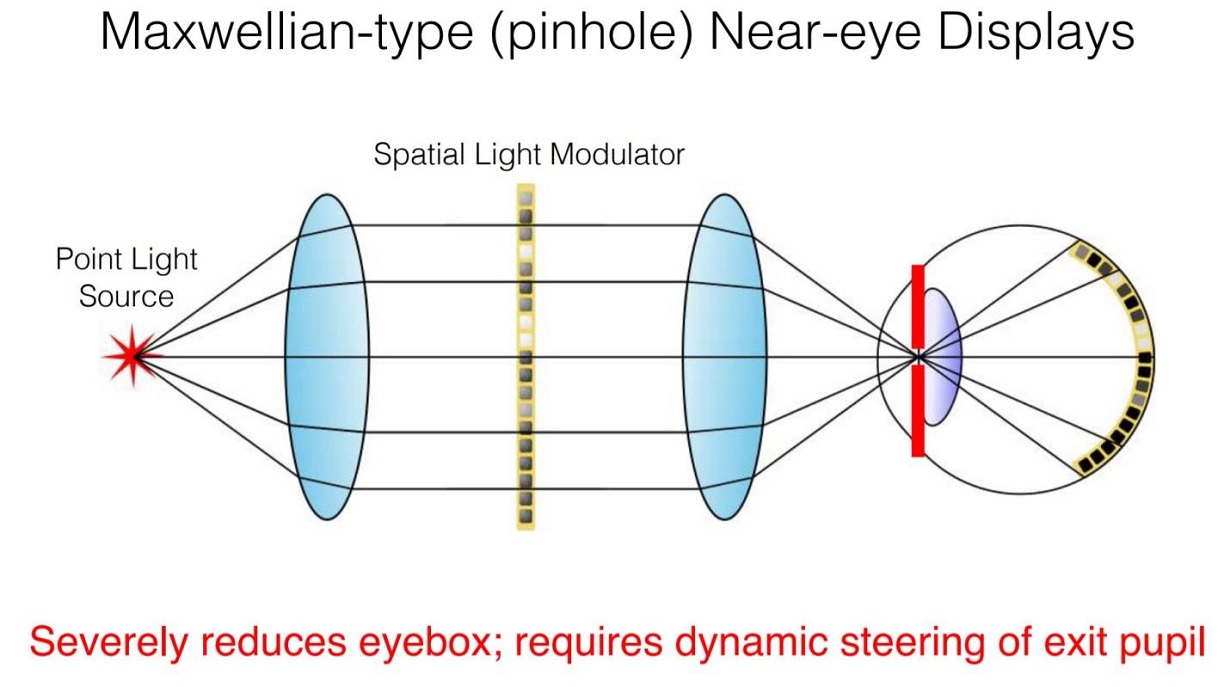

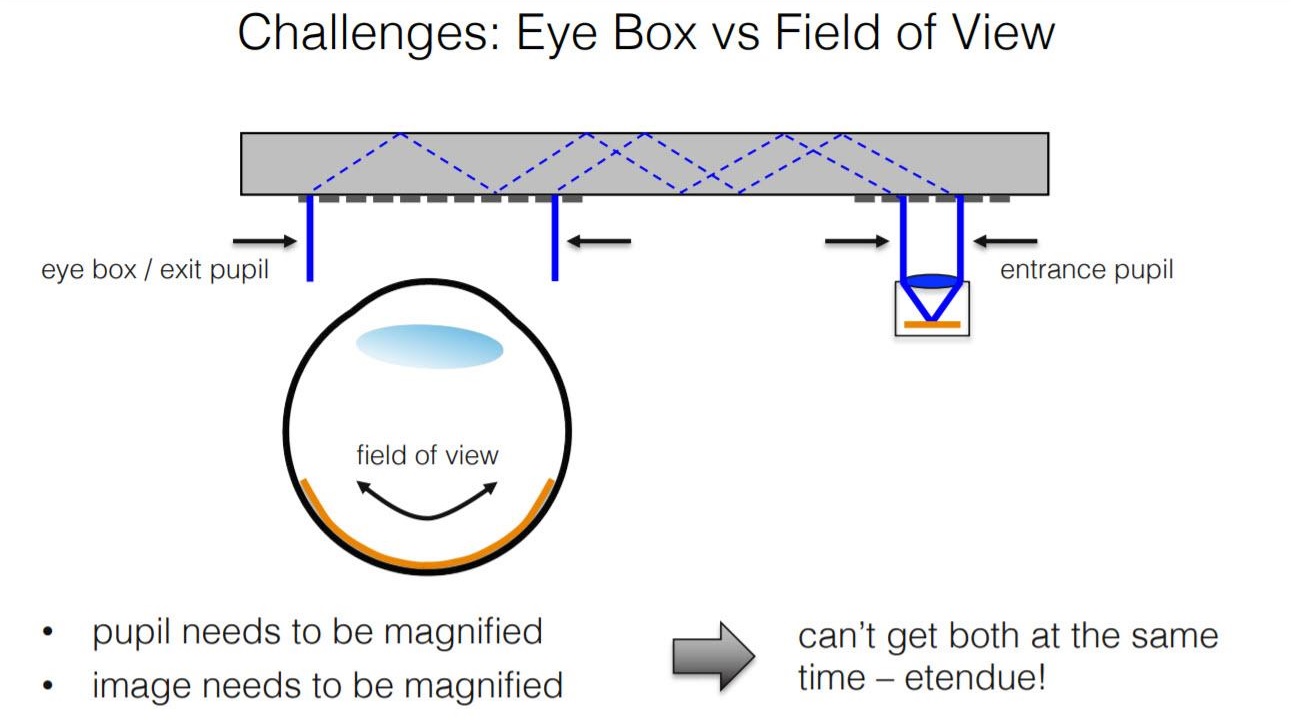

Below are additional slides from the same SIGGRAPH presentation [3] showing the Maxwellian problem and the waveguide problem of a small eyebox.

Conclusion:

After decades of attempts and billions of dollars spent trying to produce an economically practical, eye-safe, and comfortable AR/VR/MR/XR headset without satisfactory results, it seems obvious that something is wrong with the lens-based optical approach. Curved mirrors, which generate volumetric imagery, provide a practical solution and are a material paradigm shift in how HMDs should be built. Working in harmony with how the human vision system operates should be the central theme of HMD design. AR and VR headsets must present freeform wavefront imagery to the eye that is as natural and unadulterated as possible; the visual cortex does the rest.

Bio:

Doug Magyari, CEO/CTO IMMY Inc.: Inventor, scientist, entrepreneur, and visionary, Doug has spent more than 20 years working with immersive technologies and developing augmented reality (AR) headsets. He has 15 patents in chemistry, optics, acoustics, mechanics, and electronics with additional patents pending. He has started, operated, and sold six different companies.

References:

1. David M. Hoffman, Ahna R. Girshick, Kurt Akeley, and Martin S. Banks (2008) “Vergence-accommodation conflicts hinder visual performance and cause visual fatigue”, Journal of Vision 8(3):33, 1-30. https://www.ncbi.nlm.nih.gov/pubmed/18484839

2. Gregory Kramida and Amitabh Varshney (2015) “Resolving the Vergence-Accommodation Conflict in Head-Mounted Displays”, IEEE Xplore. https://ieeexplore.ieee.org/abstract/document/7226865/authors#authors

3. R. Konrad, N. Padmanaban, K. Molner, E. A. Cooper, and G. Wetzstein (2017) “Accommodation-invariant Computational Near-Eye Displays”, ACM SIGGRAPH (Transactions on Graphics 36, 4). http://www.computationalimaging.org/publications/accommodation-invariant-near-eye-displays-siggraph-2017/

For more information on the IMMY Mirror Optic System, and the IMMY Immersive Imaging Glasses visit IMMYinc.com or contact info@immyinc.com