September 9, 2016

Larry Hardesty | MIT News Office

MIT researchers and their colleagues are designing an imaging system that can read closed books.

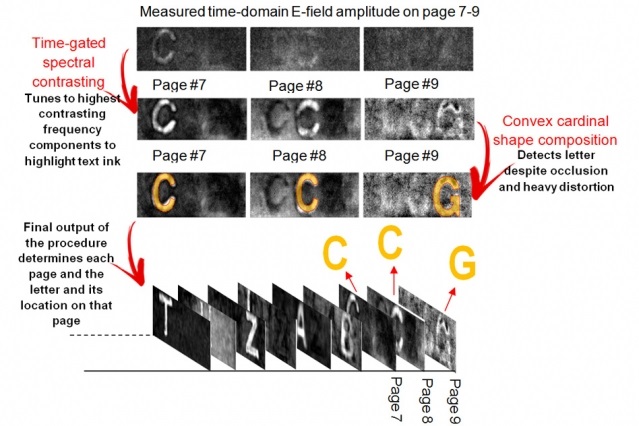

In the latest issue of Nature Communications, the researchers describe a prototype of the system, which they tested on a stack of papers, each with one letter printed on it. The system was able to correctly identify the letters on the top nine sheets.

“The Metropolitan Museum in New York showed a lot of interest in this, because they want to, for example, look into some antique books that they don’t even want to touch,” says Barmak Heshmat, a research scientist at the MIT Media Lab and corresponding author on the new paper. He adds that the system could be used to analyze any materials organized in thin layers, such as coatings on machine parts or pharmaceuticals.

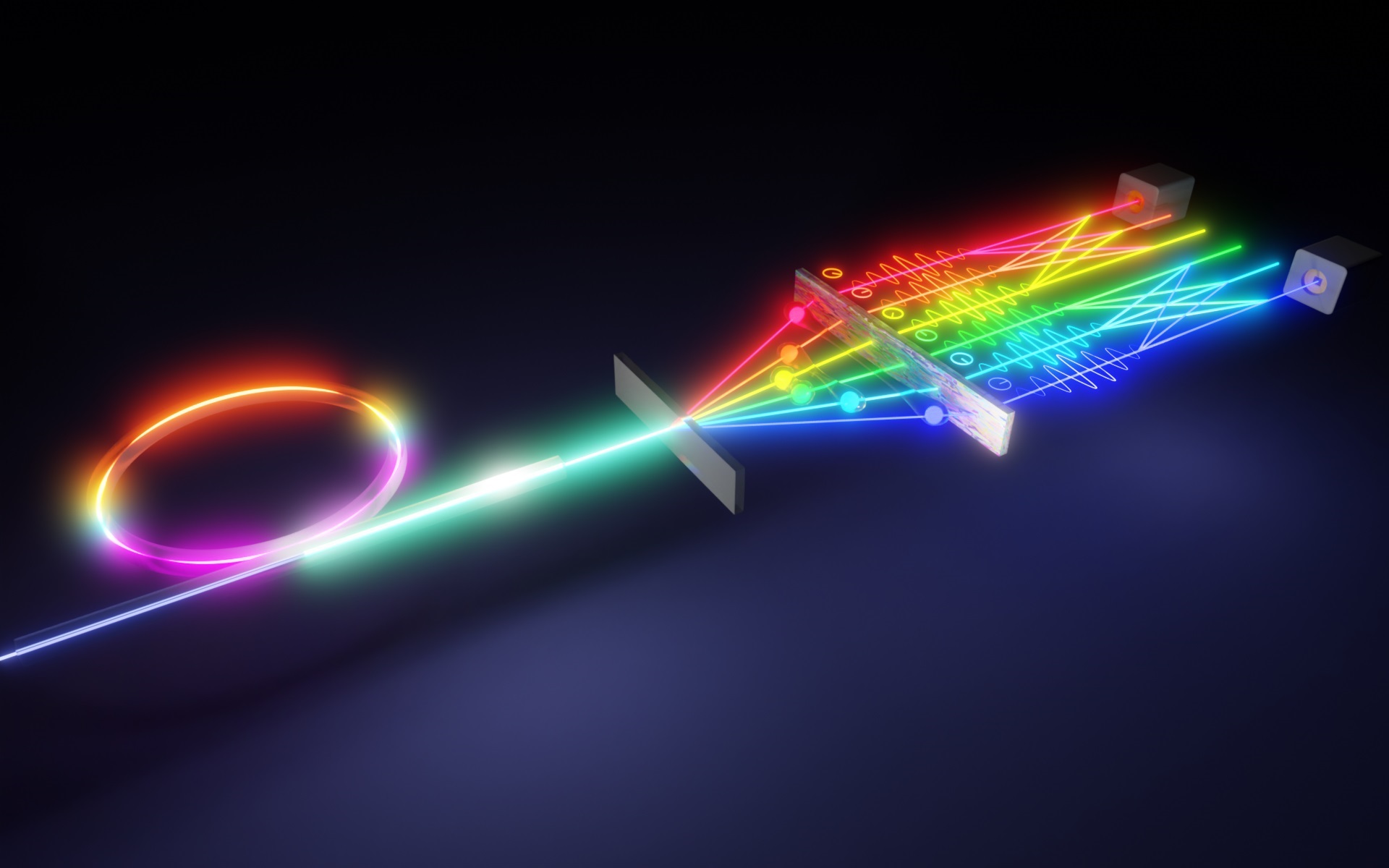

Spatial resolution, spectral contrast, and occlusion are three major bottlenecks in current imaging technologies for non-invasive inspection of complex samples such as closed books. A new study reports successful unsupervised content extraction through a densely layered structure similar to that of a closed book.

Video: MIT Media Lab

Heshmat is joined on the paper by Ramesh Raskar, an associate professor of media arts and sciences; Albert Redo Sanchez, a research specialist in the Camera Culture group at the Media Lab; two of the group’s other members; and Justin Romberg and Alireza Aghasi of Georgia Tech.

The MIT researchers developed the algorithms that acquire images from individual sheets in stacks of paper, and the Georgia Tech researchers developed the algorithm that interprets the often distorted or incomplete images as individual letters. “It’s actually kind of scary,” Heshmat says of the letter-interpretation algorithm. “A lot of websites have these letter certifications [captchas] to make sure you’re not a robot, and this algorithm can get through a lot of them.”


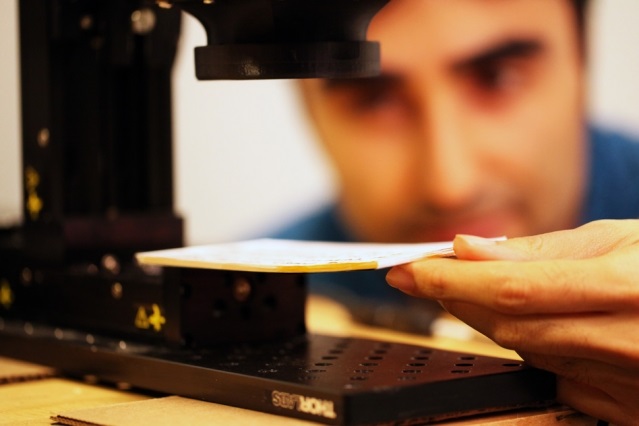

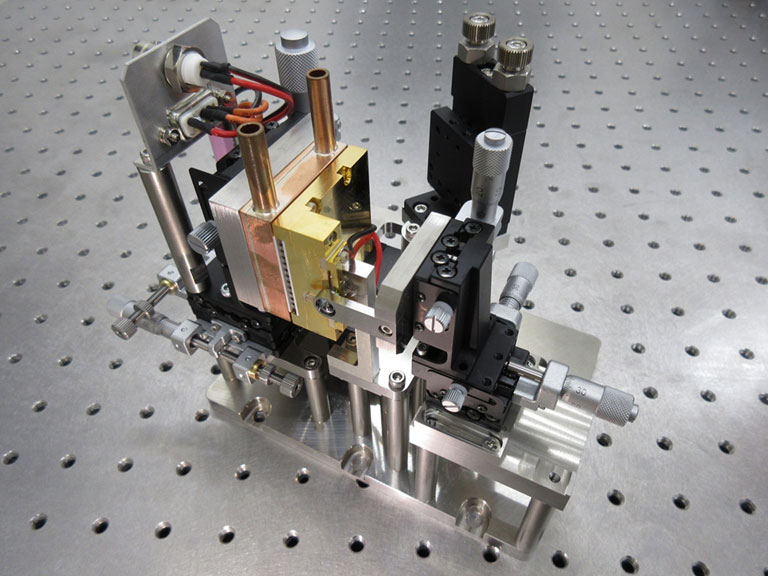

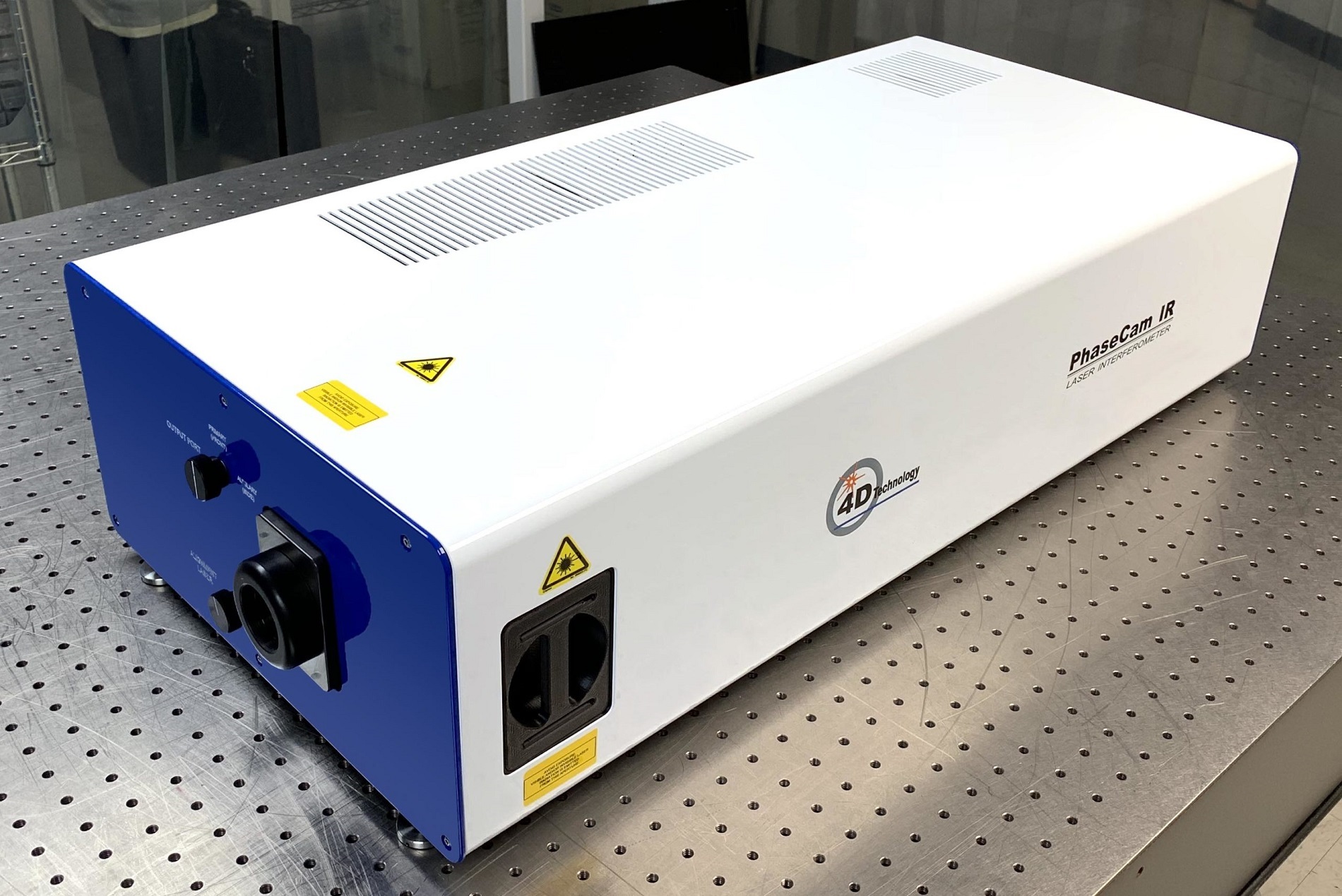

Image courtesy of Barmak Heshmat.

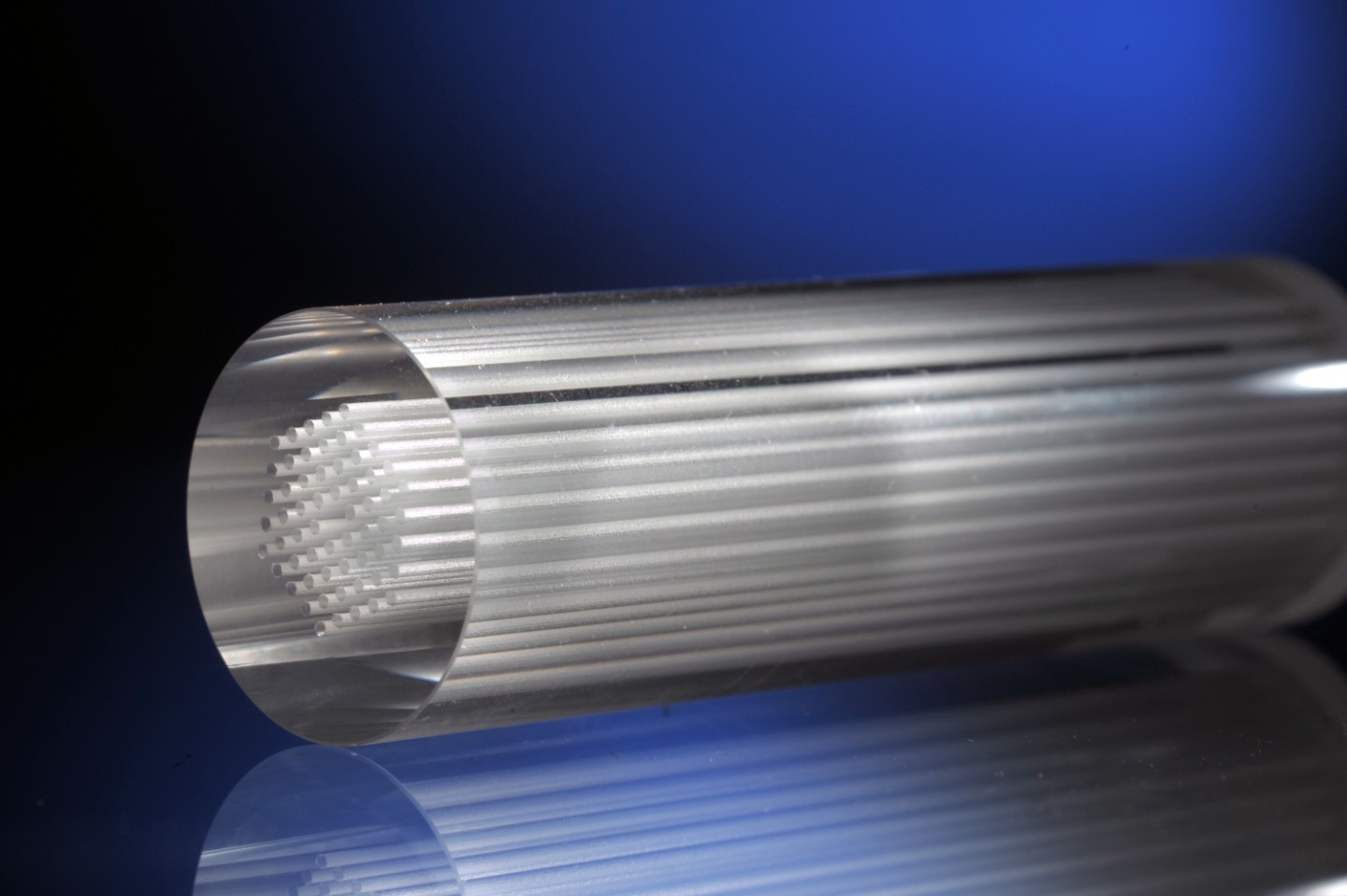

Timing terahertz

The system uses terahertz radiation, the band of electromagnetic radiation between microwaves and infrared light, which has several advantages over other types of waves that can penetrate surfaces, such as X-rays or sound waves. Terahertz radiation has been widely researched for use in security screening, because different chemicals absorb different frequencies of terahertz radiation to different degrees, yielding a distinctive frequency signature for each. By the same token, terahertz frequency profiles can distinguish between ink and blank paper, in a way that X-rays can’t.

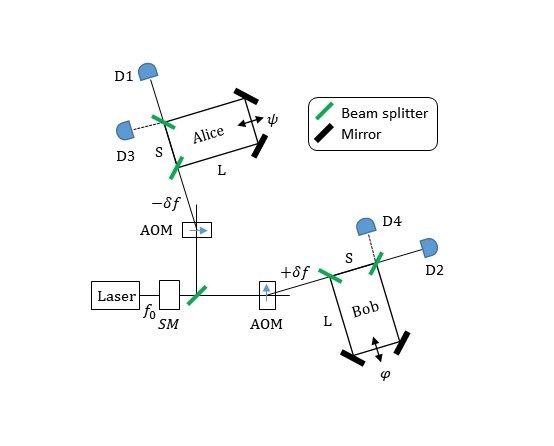

Terahertz radiation can also be emitted in such short bursts that the distance it has traveled can be gauged from the difference between its emission time and the time at which reflected radiation returns to a sensor. That gives it much better depth resolution than ultrasound.

The system exploits the fact that trapped between the pages of a book are tiny air pockets only about 20 micrometers deep. The difference in refractive index (the degree to which a material bends light) between the air and the paper means that the boundary between the two will reflect terahertz radiation back to a detector.
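As a rough illustration of why an air-paper boundary reflects at all, the standard Fresnel formula for light hitting a boundary head-on gives the fraction of power reflected from the two refractive indices alone. The sketch below is not taken from the paper: the function name is hypothetical, the value 1.5 for paper is an assumed round number for illustration, and absorption in the paper is ignored.

```python
def normal_incidence_reflectance(n1: float, n2: float) -> float:
    """Fraction of power reflected at a flat boundary between two
    lossless media, for light arriving perpendicular to the surface
    (Fresnel equations at normal incidence)."""
    r = (n1 - n2) / (n1 + n2)  # amplitude reflection coefficient
    return r * r               # power reflectance


N_AIR = 1.0
N_PAPER = 1.5  # ASSUMPTION: illustrative value, not a figure from the article

reflectance = normal_incidence_reflectance(N_AIR, N_PAPER)
print(f"Reflected fraction at one air-paper boundary: {reflectance:.1%}")
```

Under these assumptions only a few percent of the power comes back from each boundary, which is consistent with the article's point that reflections from deeper pages grow progressively weaker.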

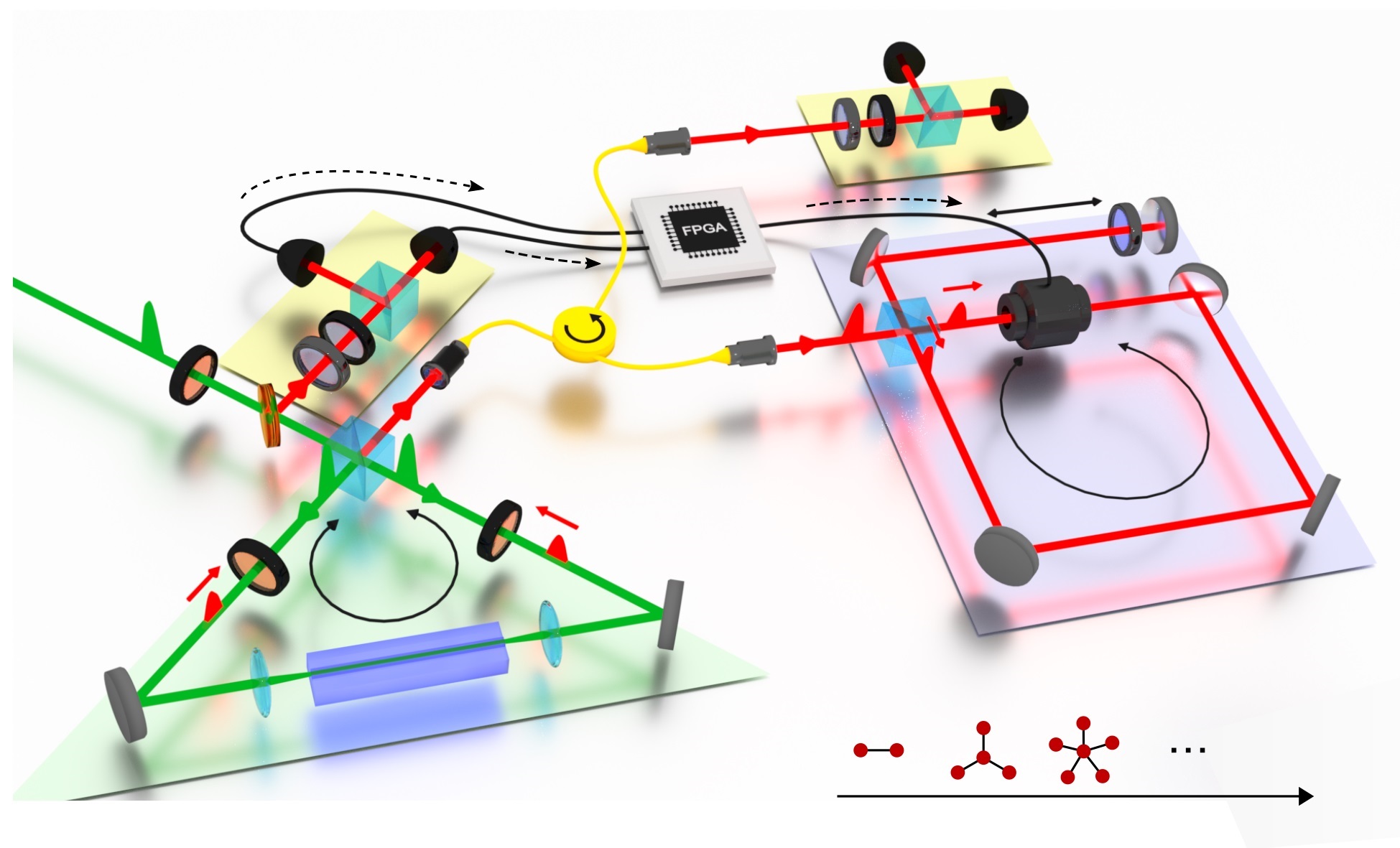

In the researchers’ setup, a standard terahertz camera emits ultrashort bursts of radiation, and the camera’s built-in sensor detects their reflections. From the reflections’ time of arrival, the MIT researchers’ algorithm can gauge the distance to the individual pages of the book.
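The basic time-of-flight arithmetic behind this can be sketched in a few lines. This is only the textbook relation between round-trip delay and distance, not the researchers' algorithm; the function names are hypothetical, and the pulse is assumed to travel through a single medium with a known refractive index.

```python
C = 299_792_458.0  # speed of light in vacuum, meters per second


def round_trip_delay(depth_m: float, refractive_index: float = 1.0) -> float:
    """Time for a pulse to reach a reflector at depth_m and come back."""
    return 2.0 * refractive_index * depth_m / C


def depth_from_round_trip(delay_s: float, refractive_index: float = 1.0) -> float:
    """One-way distance to a reflector, given the measured round-trip delay."""
    return C * delay_s / (2.0 * refractive_index)


# A 20-micrometer air gap, the pocket size mentioned in the article.
gap_delay = round_trip_delay(20e-6)
print(f"Round trip across a 20 micrometer air gap: {gap_delay * 1e15:.0f} fs")
# prints: Round trip across a 20 micrometer air gap: 133 fs
```

The delay across a single air pocket is on the order of a hundred femtoseconds, which suggests why very short bursts are needed to tell neighboring pages apart.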

True signals

While most of the radiation is either absorbed or reflected by the book, some of it bounces around between pages before returning to the sensor, producing a spurious signal. The sensor’s electronics also produce a background hum. One of the tasks of the MIT researchers’ algorithm is to filter out all this “noise.”

The information about the pages’ distance helps: It allows the algorithm to home in on just the terahertz signals whose arrival times suggest that they are true reflections. Then, it relies on two different measures of the reflections’ energy and assumptions about both the energy profiles of true reflections and the statistics of noise to extract information about the chemical properties of the reflecting surfaces.
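The idea of keeping only signals that arrive when a page reflection is expected can be illustrated with a simple time gate. The sketch below is a generic signal-processing example, not the method from the paper: the function names, the fixed window width, and the use of summed squared amplitude as an energy measure are all assumptions made for illustration. The article does not say which two energy measures the researchers use.

```python
def time_gate(times, samples, expected_arrivals, half_width):
    """Zero out every sample that does not fall within half_width of
    one of the expected arrival times (hypothetical helper)."""
    gated = []
    for t, s in zip(times, samples):
        near_page = any(abs(t - t0) <= half_width for t0 in expected_arrivals)
        gated.append(s if near_page else 0.0)
    return gated


def window_energy(times, samples, center, half_width):
    """Sum of squared amplitudes inside one window. ASSUMPTION: one
    possible energy measure, chosen only for this example."""
    return sum(s * s for t, s in zip(times, samples)
               if abs(t - center) <= half_width)


# Toy trace: sample times in picoseconds and made-up amplitudes.
times = [0, 1, 2, 3, 4, 5]
samples = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

print(time_gate(times, samples, expected_arrivals=[1, 4], half_width=0.5))
# prints: [0.0, 2.0, 0.0, 0.0, 5.0, 0.0]
print(window_energy(times, samples, center=4, half_width=1))
# prints: 77.0
```

A fixed window is the simplest possible choice; a real system would also have to account for the pulse shape and for the noise statistics the article mentions.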

At the moment, the algorithm can correctly deduce the distance from the camera to the top 20 pages in a stack, but past a depth of nine pages, the energy of the reflected signal is so low that the differences between frequency signatures are swamped by noise. Terahertz imaging is still a relatively young technology, however, and researchers are constantly working to improve both the accuracy of detectors and the power of the radiation sources, so deeper penetration should be possible.

“So much work has gone into terahertz technology to get the sources and detectors working, with big promises for imaging new and exciting things,” says Laura Waller, an associate professor of electrical engineering and computer science at the University of California at Berkeley. “This work is one of the first to use these new tools along with advances in computational imaging to get at pictures of things we could never see with optical technologies. Now we can judge a book through its cover!”