February 12, 2018

For photographers and scientists, lenses are lifesavers. They reflect and refract light, making possible the imaging systems that drive discovery through the microscope and preserve history through cameras.

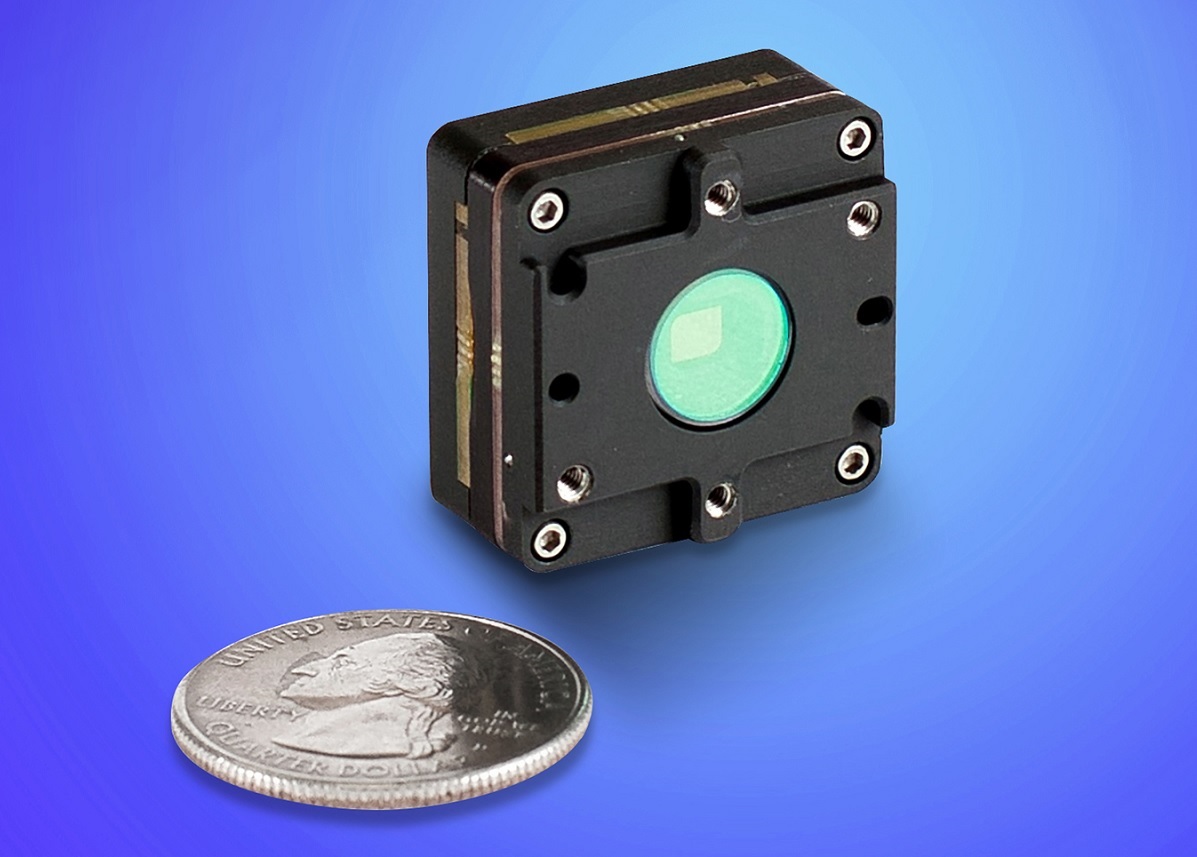

But today’s glass-based lenses are bulky and resist miniaturization. Next-generation technologies, such as ultrathin cameras or tiny microscopes, require lenses made of a new array of materials.

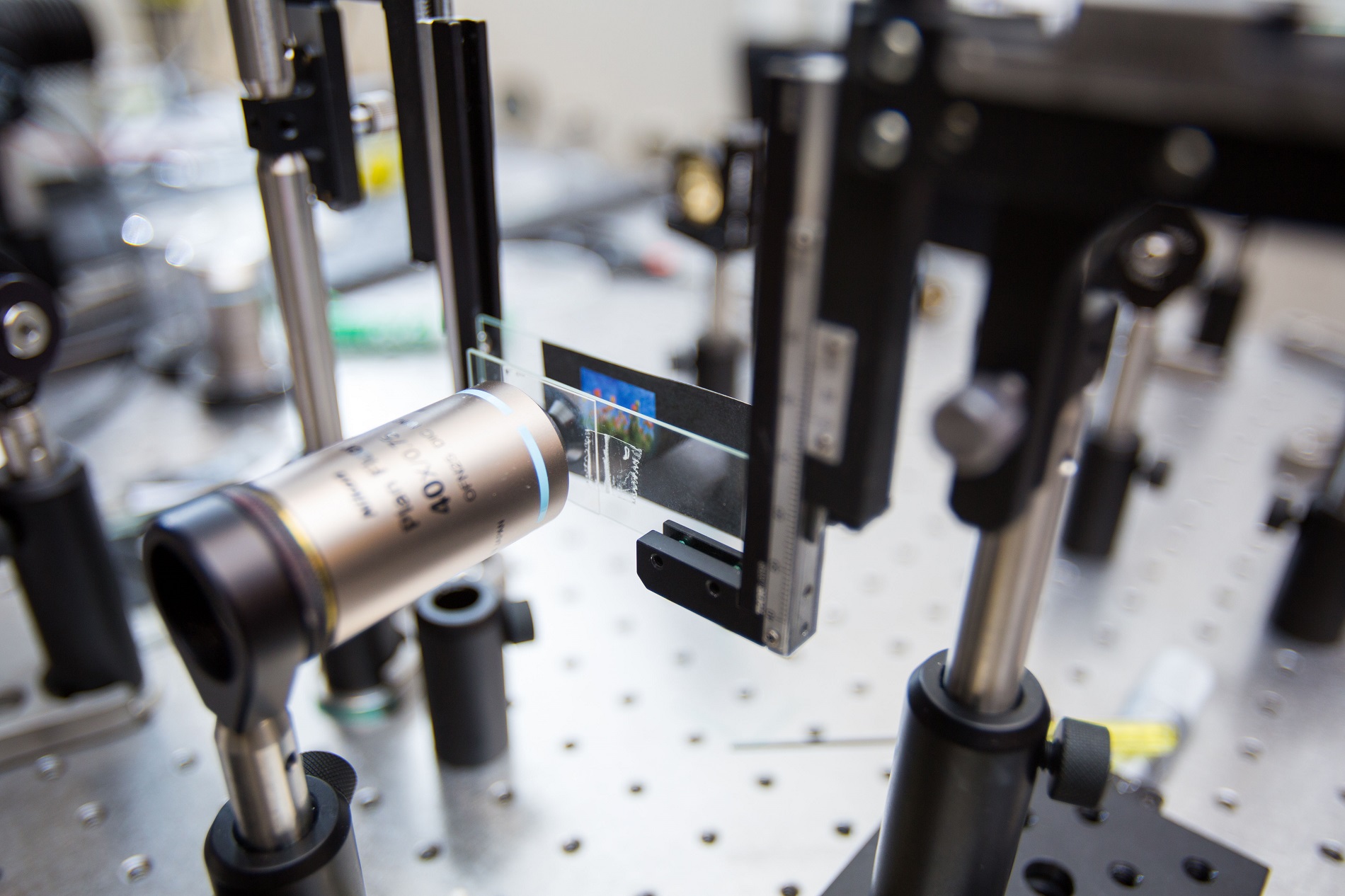

In a paper published Feb. 9 in Science Advances, scientists at the University of Washington announced that they have successfully combined two different imaging methods — a type of lens designed for nanoscale interaction with lightwaves, along with robust computational processing — to create full-color images.

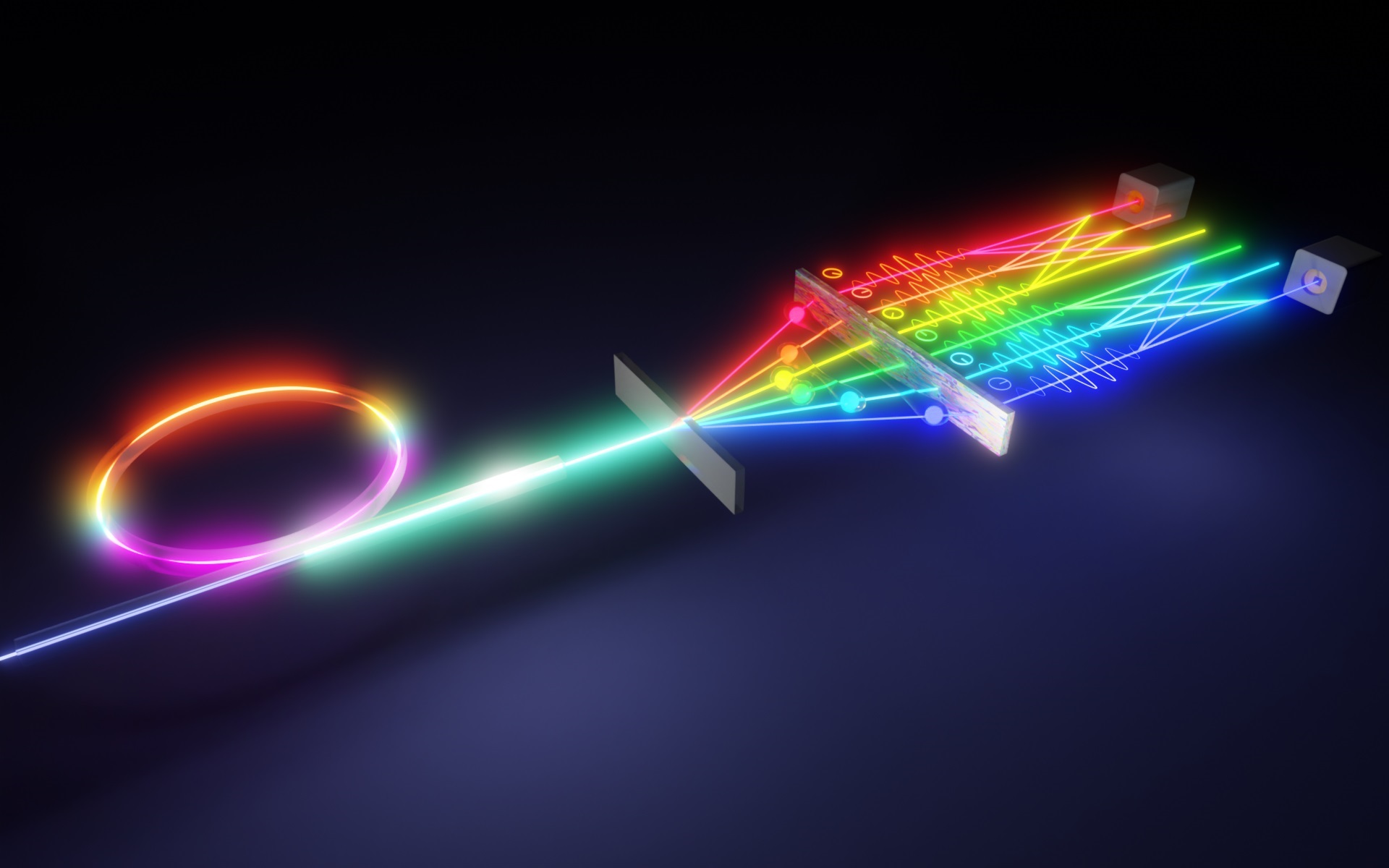

The team’s ultrathin lens is part of a class of engineered objects known as metasurfaces. Metasurfaces are 2-D analogs of metamaterials, which are manufactured materials with physical and chemical properties not normally found in nature. A metasurface-based lens, or metalens, consists of flat, microscopically patterned material surfaces designed to interact with lightwaves. To date, metalenses have yielded clear images, at best, for only small slices of the visual spectrum. But the UW team’s metalens, in conjunction with computational filtering, yields full-color images with very low levels of aberrations across the visual spectrum.

“Our approach combines the best aspects of metalenses with computational imaging — enabling us, for the first time, to produce full-color images with high efficiency,” said senior author Arka Majumdar, a UW assistant professor of physics and electrical engineering.

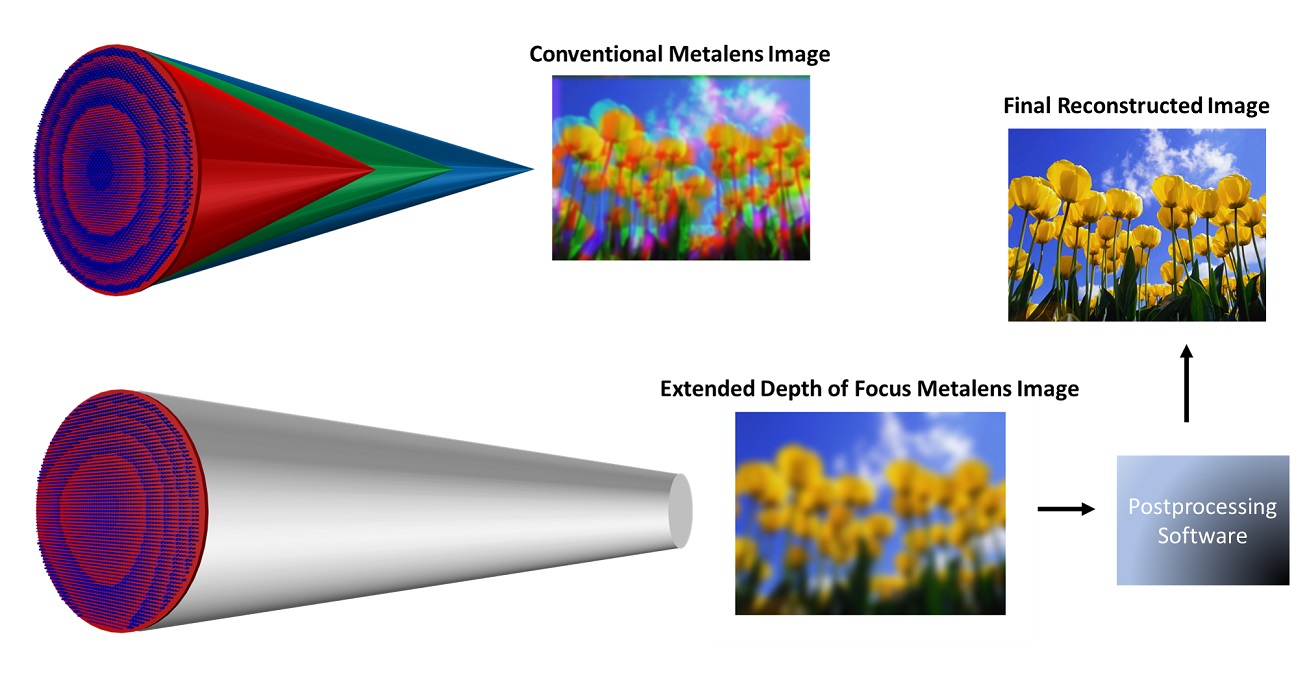

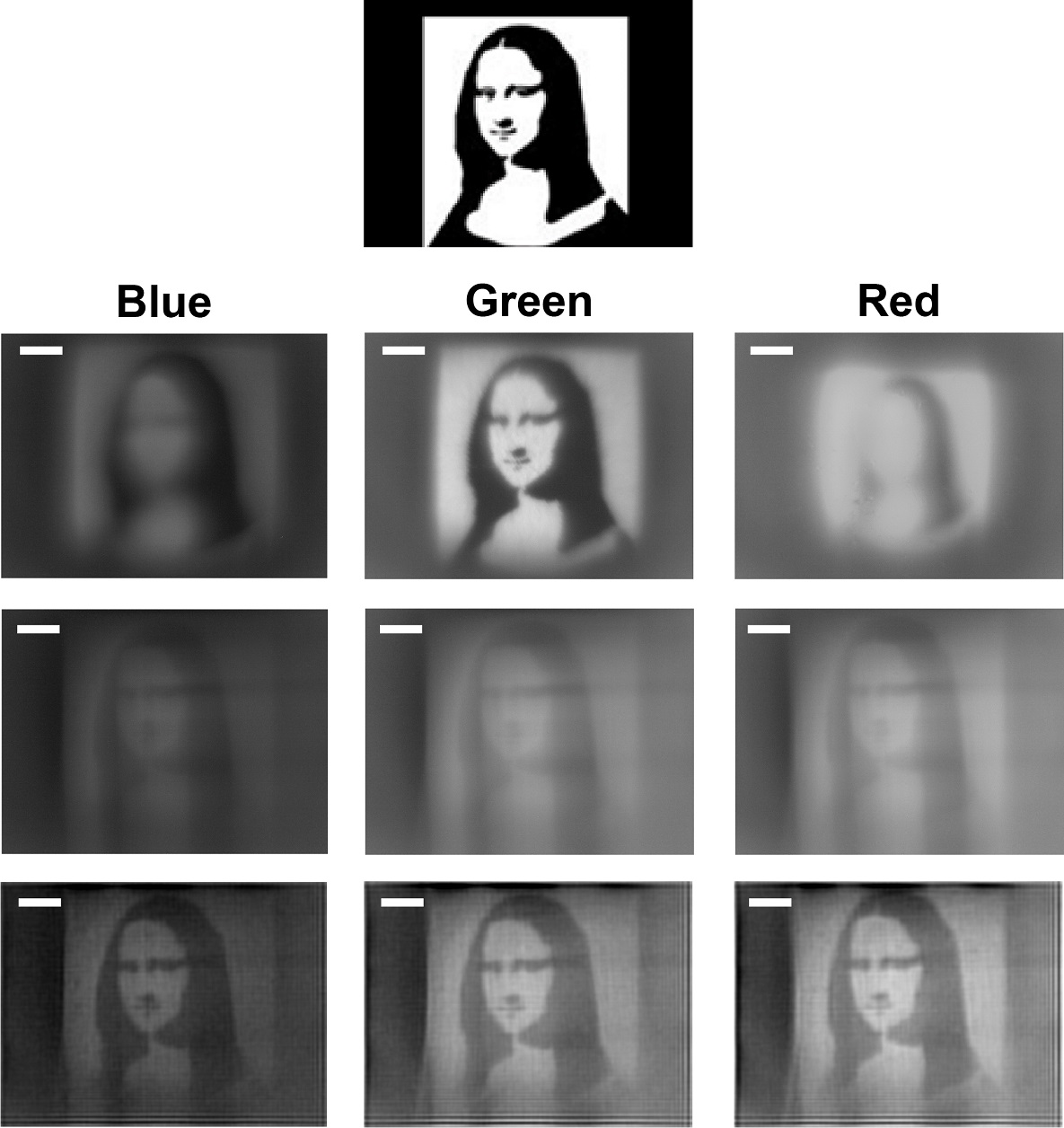

The UW team’s metalens consists of arrays of tiny pillars of silicon nitride on glass, which affect how light interacts with the surface. Depending on the size and arrangement of these pillars, microscopic lenses with different properties can be designed. A traditional metalens (top) exhibits shifts in focal length for different wavelengths of light, producing images with severe color blur. The UW team’s modified metalens design (bottom), however, interacts with different wavelengths in the same manner, generating uniformly blurry images that enable simple and fast software correction to recover sharp, in-focus images. Credit: Shane Colburn/Alan Zhan/Arka Majumdar

Instead of manufactured glass or silicone, metalenses consist of repeated arrays of nanometer-scale structures, such as columns or fins. If properly laid out at these minuscule scales, these structures can interact with individual lightwaves with precision that traditional lenses cannot. Since metalenses are also so small and thin, they take up much less room than the bulky lenses of cameras and high-resolution microscopes. Metalenses are manufactured by the same type of semiconductor fabrication process that is used to make computer chips.

“Metalenses are potentially valuable tools in optical imaging since they can be designed and constructed to perform well for a given wavelength of light,” said lead author Shane Colburn, a UW doctoral student in electrical engineering. “But that has also been their drawback: Each type of metalens only works best within a narrow wavelength range.”

In experiments producing images with metalenses, the optimal wavelength range so far has been very narrow: at best around 60 nanometers wide with high efficiency. But the visual spectrum is 300 nanometers wide.

Today’s metalenses typically produce accurate images within their narrow optimal range — such as an all-green image or an all-red image. For scenes that include colors outside of that optimal range, the images appear blurry, with poor resolution and other defects known as “chromatic aberrations.” For a rose in a blue vase, a red-optimized metalens might pick up the rose’s red petals with few aberrations, but the green stem and blue vase would be unresolved blotches — with high levels of chromatic aberrations.

The UW team’s metalens, coupled with computational processing, can capture images for a variety of light wavelengths with very low levels of chromatic aberrations. For this black-and-white image of the Mona Lisa (at top), the first row shows that a green-optimized metalens captures the image well for green light but causes severe blurring for blue and red wavelengths. The UW team’s improved metalens (second row) captures images with similar types of aberrations for blue, green and red wavelengths, showing uniform blurring across wavelengths. Computational filtering removes most of these aberrations, as shown in the bottom row. The result is a substantial improvement over a traditional metalens (first row), which is in focus only for green light and is unintelligible for blue and red. Credit: Shane Colburn/Alan Zhan/Arka Majumdar

Majumdar and his team hypothesized that, if a single metalens could produce a consistent type of visual aberration in an image across all visible wavelengths, then they could resolve the aberrations for all wavelengths afterward using computational filtering algorithms. For the rose in the blue vase, this type of metalens would capture an image of the red rose, blue vase and green stem all with similar types of chromatic aberrations, which could be tackled later using computational filtering.

They engineered and constructed a metalens whose surface was covered by tiny, nanometers-wide columns of silicon nitride. These columns were small enough to diffract light across the entire visual spectrum, which encompasses wavelengths ranging from 400 to 700 nanometers.

Critically, the researchers designed the arrangement and size of the silicon nitride columns in the metalens so that it would exhibit a “spectrally invariant point spread function.” Essentially, this feature ensures that — for the entire visual spectrum — the image would contain aberrations that can be described by the same type of mathematical formula. Since this formula would be the same regardless of the wavelength of light, the researchers could apply the same type of computational processing to “correct” the aberrations.
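To make the idea concrete, here is a minimal Python sketch of how one blur description can drive one correction for every color. It is not the team's code, and the article does not name the filtering algorithm. The sketch assumes Wiener deconvolution, a common choice for this kind of problem, and uses a made-up Gaussian blur as a stand-in for the real point spread function. The names `gaussian_psf`, `blur`, `wiener_deconvolve`, `restore_rgb` and the `noise_to_signal` parameter are all hypothetical.

```python
import numpy as np


def gaussian_psf(shape, sigma):
    """Hypothetical stand-in PSF: a normalized 2-D Gaussian centered in the frame."""
    rows, cols = shape
    y = np.arange(rows) - rows // 2
    x = np.arange(cols) - cols // 2
    yy, xx = np.meshgrid(y, x, indexing="ij")
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()


def blur(channel, psf):
    """Simulate the lens: circular convolution of one color channel with the PSF."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))  # move the PSF center to the origin
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * otf))


def wiener_deconvolve(channel, psf, noise_to_signal=1e-3):
    """Undo a known blur with a Wiener filter (assumed, not confirmed by the article)."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    # The constant in the denominator keeps weakly transmitted frequencies
    # from being amplified without bound.
    wiener = np.conj(otf) / (np.abs(otf) ** 2 + noise_to_signal)
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * wiener))


def restore_rgb(image, psf, noise_to_signal=1e-3):
    """Apply the same filter to every color channel.

    This only makes sense if the blur is the same for all colors, which is
    what a spectrally invariant point spread function provides.
    """
    channels = [
        wiener_deconvolve(image[..., c], psf, noise_to_signal)
        for c in range(image.shape[-1])
    ]
    return np.stack(channels, axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.random((64, 64, 3))            # synthetic test scene
    psf = gaussian_psf((64, 64), sigma=1.5)    # one PSF shared by all colors
    blurred = np.stack([blur(sharp[..., c], psf) for c in range(3)], axis=-1)
    restored = restore_rgb(blurred, psf)
    print("error before filtering:", np.mean((blurred - sharp) ** 2))
    print("error after filtering: ", np.mean((restored - sharp) ** 2))
```

With a conventional metalens, each color would need its own blur description, and the strongly defocused colors would lose too much detail to recover. Using a single `psf` for all three channels is the software counterpart of the lens design choice described above.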

They then built a prototype metalens based on their design and tested how well the metalens performed when coupled with computational processing. One standard measure of image quality is “structural similarity,” a metric that describes how well two images of the same scene share luminance, structure and contrast. The higher the chromatic aberrations in one image, the lower the structural similarity it will have with the other image. The UW team found that when they used a conventional metalens, they achieved a structural similarity of 74.8 percent when comparing red and blue images of the same pattern; however, when using their new metalens design and computational processing, the structural similarity rose to 95.6 percent. At the same time, the total thickness of their imaging system is only 200 micrometers, which is about 2,000 times thinner than current cellphone cameras.
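For readers curious about the metric, the sketch below computes a simplified structural similarity score in Python. It evaluates the widely used SSIM formula once over the whole image, whereas standard implementations average it over small local windows. The article does not say which variant the team used, so treat this as an illustration only. The function name `global_ssim` and its default constants are assumptions.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two same-sized grayscale images.

    Simplification: the statistics are taken over the whole image rather
    than over sliding local windows.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")

    # Small constants that stabilize the ratio when means or variances are near zero.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()              # brightness (luminance) terms
    var_x, var_y = x.var(), y.var()              # contrast terms
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()    # structure term

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    reference = rng.random((64, 64))
    degraded = reference + 0.2 * rng.standard_normal((64, 64))
    print("identical images:", global_ssim(reference, reference))
    print("degraded image:  ", global_ssim(reference, degraded))
```

Two identical images score 1, which corresponds to 100 percent, and the score drops as one image is degraded relative to the other. For real measurements, a windowed implementation such as `structural_similarity` in scikit-image is the more usual choice.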

“This is a substantial improvement in metalens performance for full-color imaging — particularly for eliminating chromatic aberrations,” said co-author Alan Zhan, a UW doctoral student in physics.

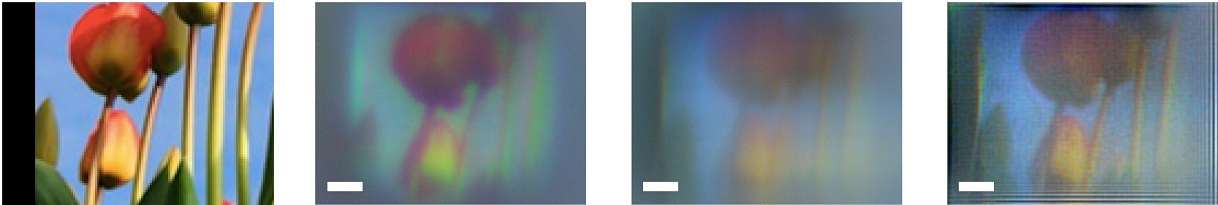

For the color image of flower buds at the far left, a traditional metalens (second from left) captures images with strong chromatic aberrations and blurring. The UW team’s modified metalens (third from left) yields an image with similar levels of blurring for all colors. But the team removes most of these aberrations using computational filtering, producing an image (right) with high structural similarity to the original. Credit: Shane Colburn/Alan Zhan/Arka Majumdar

In addition, unlike many other metasurface-based imaging systems, the UW team’s approach isn’t affected by the polarization state of light — which refers to the orientation of the electric field in the 3-D space that lightwaves are traveling in.

The team said that its method should serve as a road map toward making a metalens — and designing additional computational processing steps — that can capture light more effectively, as well as sharpen contrast and improve resolution. That may bring tiny, next-generation imaging systems within reach.

The research was funded by the UW, an Intel Early Career Faculty Award and an Amazon Catalyst Award.