August 19, 2014

Krista Weidner

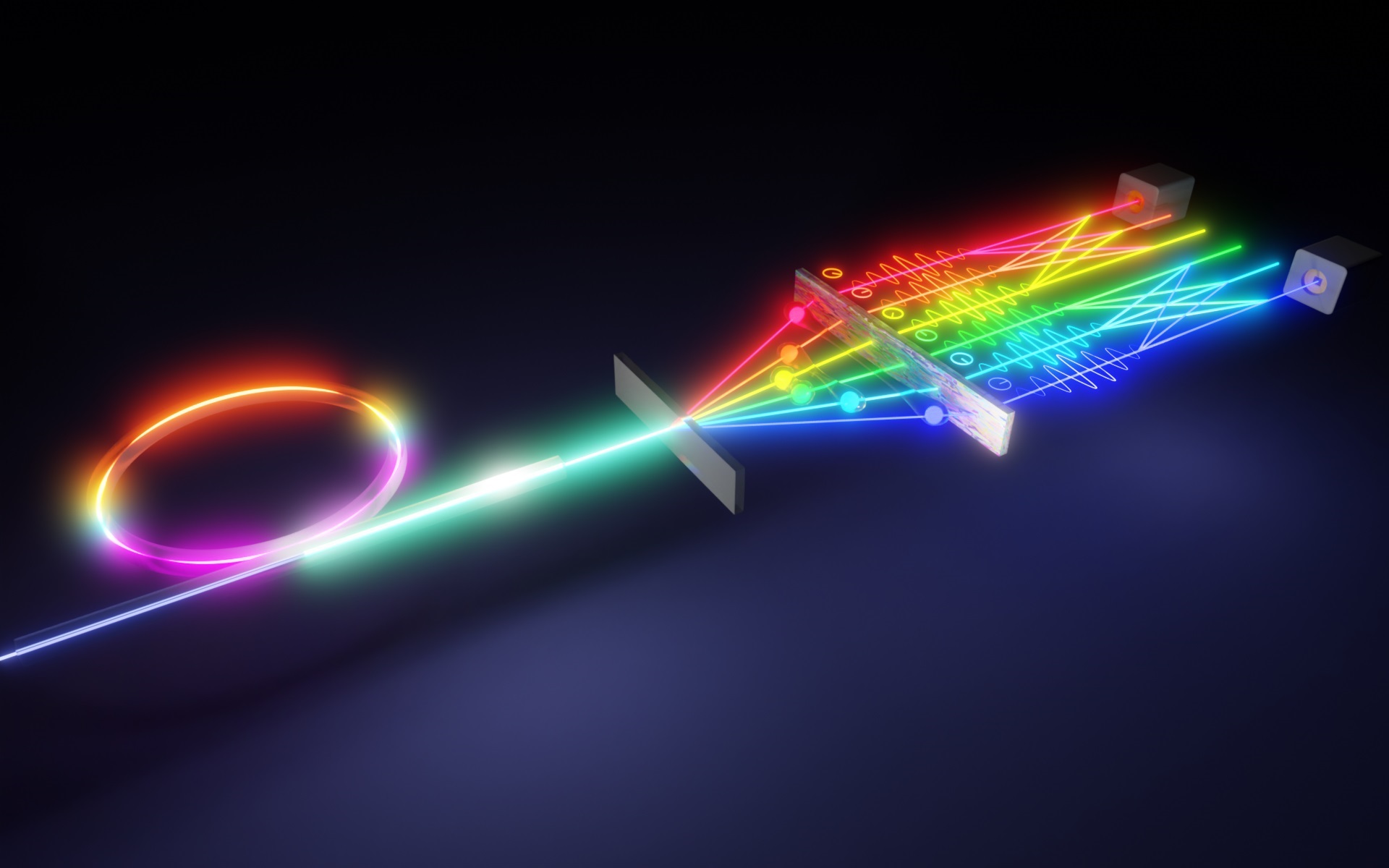

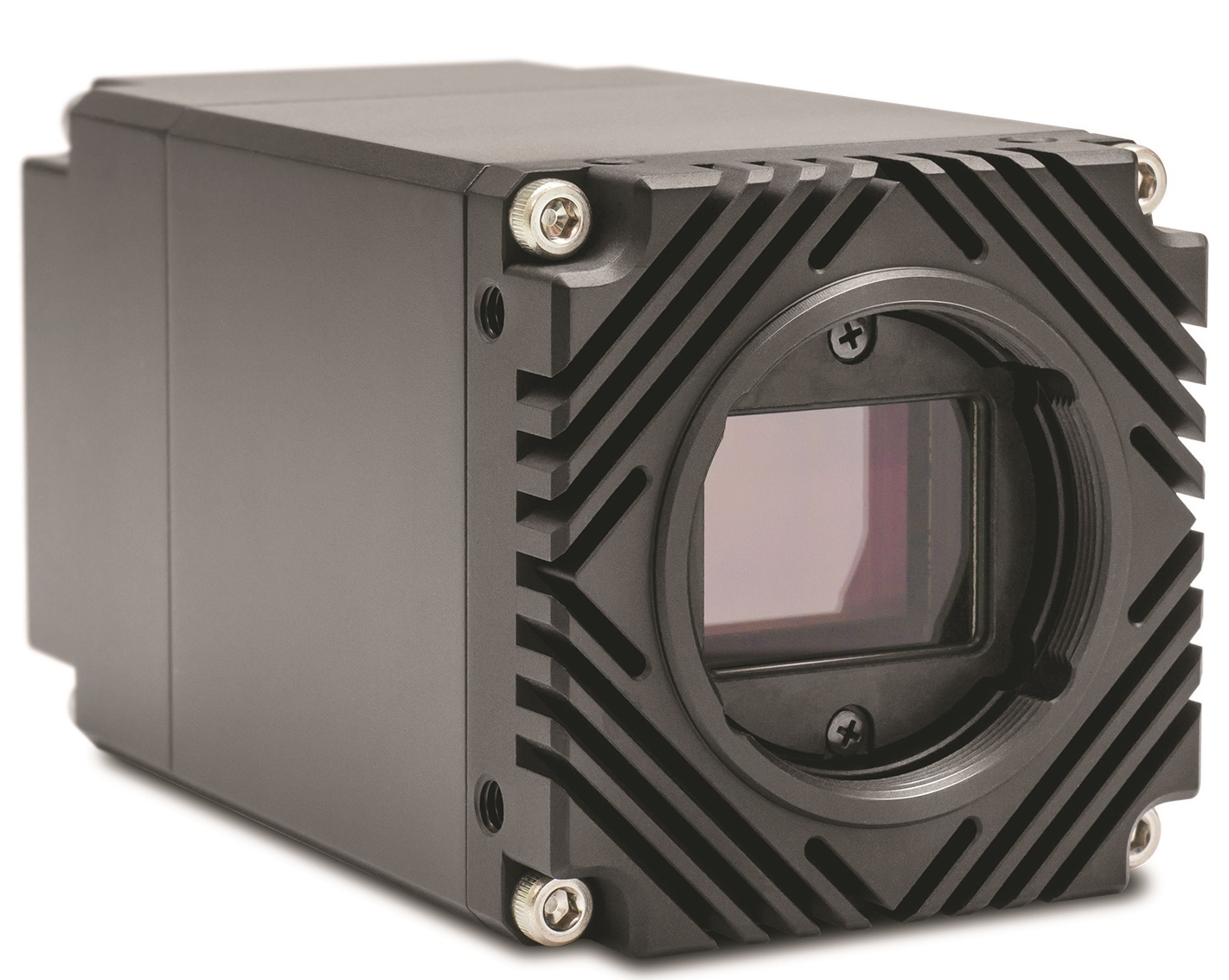

UNIVERSITY PARK, Pa. -- “Deep learning” is the task at hand for Vijaykrishnan Narayanan and his multidisciplinary team of researchers, who are developing computerized vision systems that can match, and even surpass, the capabilities of human vision. Narayanan, professor of computer science and engineering and electrical engineering, and his team received a $10 million Expeditions in Computing award from the National Science Foundation (NSF) last fall to enhance the ability of computers not only to record imagery, but to actually understand what they are seeing, a concept that Narayanan calls "deep learning."

When it comes to processing what we see, the human brain performs complex tasks that we don’t even realize it is doing, says Narayanan, leader of the Expeditions in Computing research team. To offer an example of how the human brain can put images into context, he gestures toward a photographer in the room. "Even though I haven't seen this person in a while and his camera is partially obscuring his face, I know who he is. My brain fills in the gaps based on past experience. Our research goal, then, is to develop computer systems that perceive the world similar to the way a human being does."

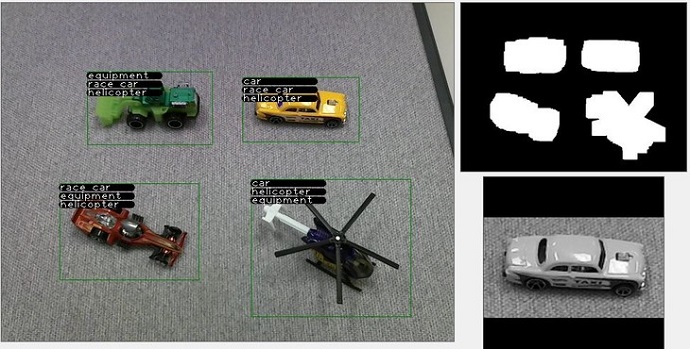

Some types of computerized vision systems aren't new. Many digital cameras, for example, can detect human faces and put them into focus. But smart cameras don't take into account complex, cluttered environments. That’s part of the team's research goal: to help computerized systems understand and interact with their surroundings and intelligently analyze complex scenes to build a context of what’s going on around them. For example, the presence of a computer monitor suggests there’s most likely a keyboard nearby and a mouse next to that keyboard.

Narayanan offered another example: "Many facilities have security camera systems in place that can identify people. The camera can see that I am Vijay. What happens if I bring a child with me? The obvious assumption would be, this child has been seen with Vijay so many times, it must be his son. But let's consider the context. What if this security camera is at the YMCA? The YMCA is usually a place that has mentoring programs, so this child might not be my son; in fact, he might be my neighbor. So we're working on holistic systems that can incorporate all kinds of information and build a context of what's happening by processing what’s in the scene."

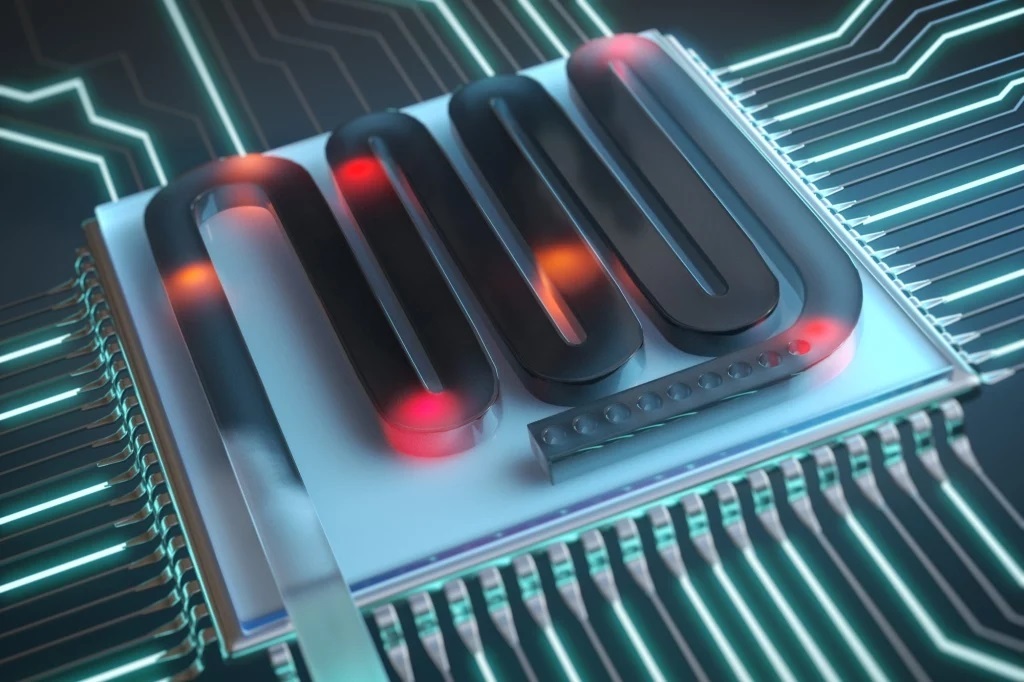

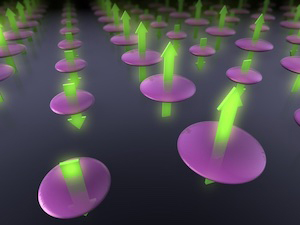

Another research goal is to develop machine vision systems that process information efficiently, using minimal power. Most current machine vision systems use a lot of power and are designed for one specific application (such as the face recognition feature found in many digital cameras). The researchers want to build low-power devices that can replicate the efficiency of the human visual cortex, which can make sense of cluttered environments and complete a range of visual tasks using less than 20 watts of power.

Narayanan and other team members are looking at several scenarios for practical applications of smart visual systems, including helping visually impaired people with grocery shopping. He and colleagues Mary Beth Rosson and John Carroll, professors of information sciences and technology and co-directors of the Computer-Supported Collaboration and Learning Lab, are exploring how artificial vision systems can interact with and help visually impaired people. "We'll be working with collaborators from the Sight Loss Support Group of Centre County to better understand the practices and experiences of visual impairment, and to design mockups, prototypes, and eventually applications to support them in novel and appropriate ways," Carroll said.

Another research priority is using smart visual systems to enhance driver safety. Distracted driving is the cause of more than a quarter million injuries each year, so a device that warns distracted drivers when they have taken their eyes off the road for too long could greatly reduce serious accidents. These systems would also help draw drivers' attention to objects or movements in the environment that they might otherwise miss.

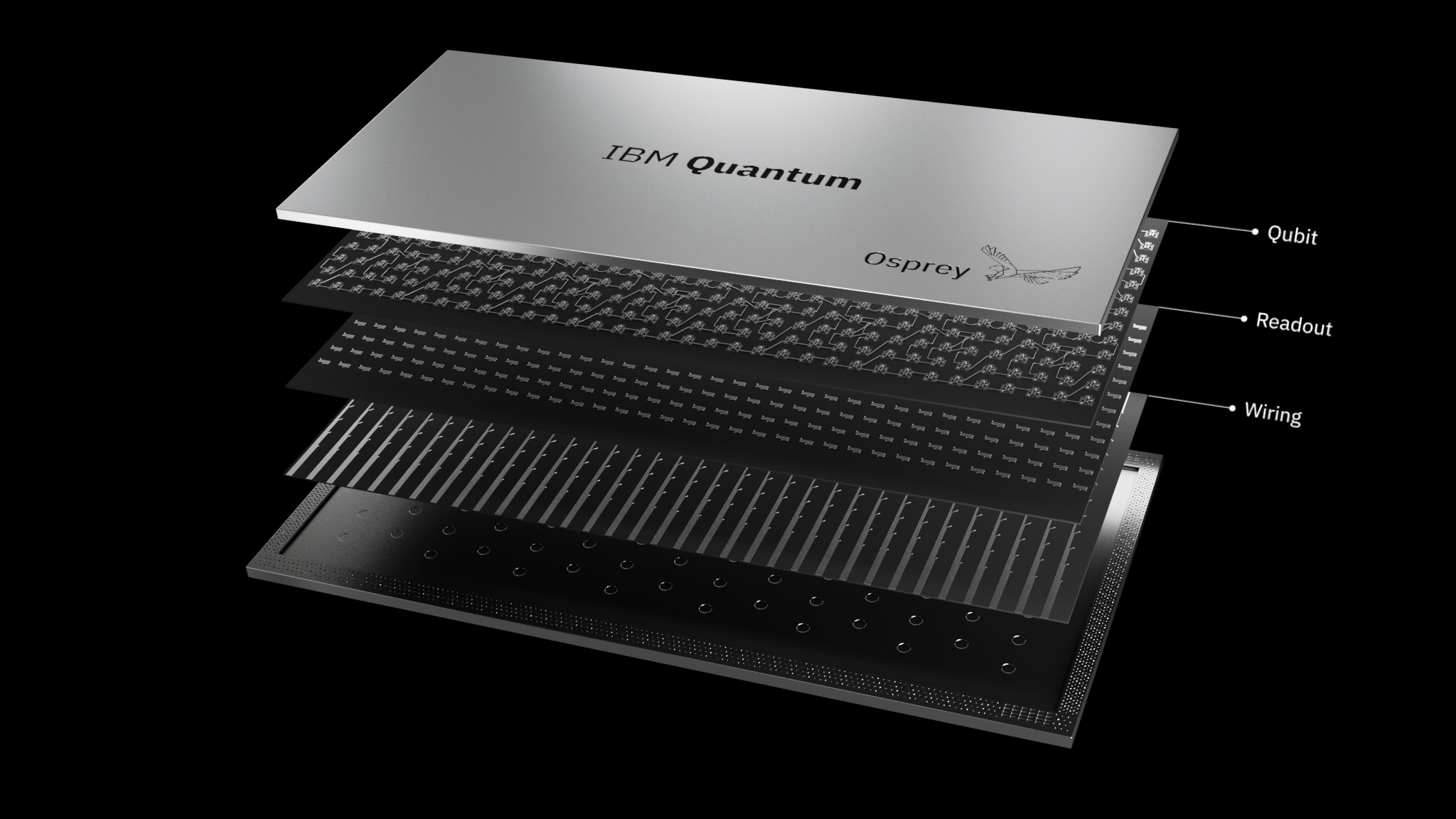

A significant aspect of the research is to redesign the computer chips that are the building blocks for smart visual systems. "To develop a typical computer chip, you give it a series of commands: 'Get this number, get this number, add the two numbers, store the number in this space,'" Narayanan says. "We're working on optimizing these commands so that we don't need to keep repeating them. We want to reduce the cost of fetching instructions, which will increase efficiency."

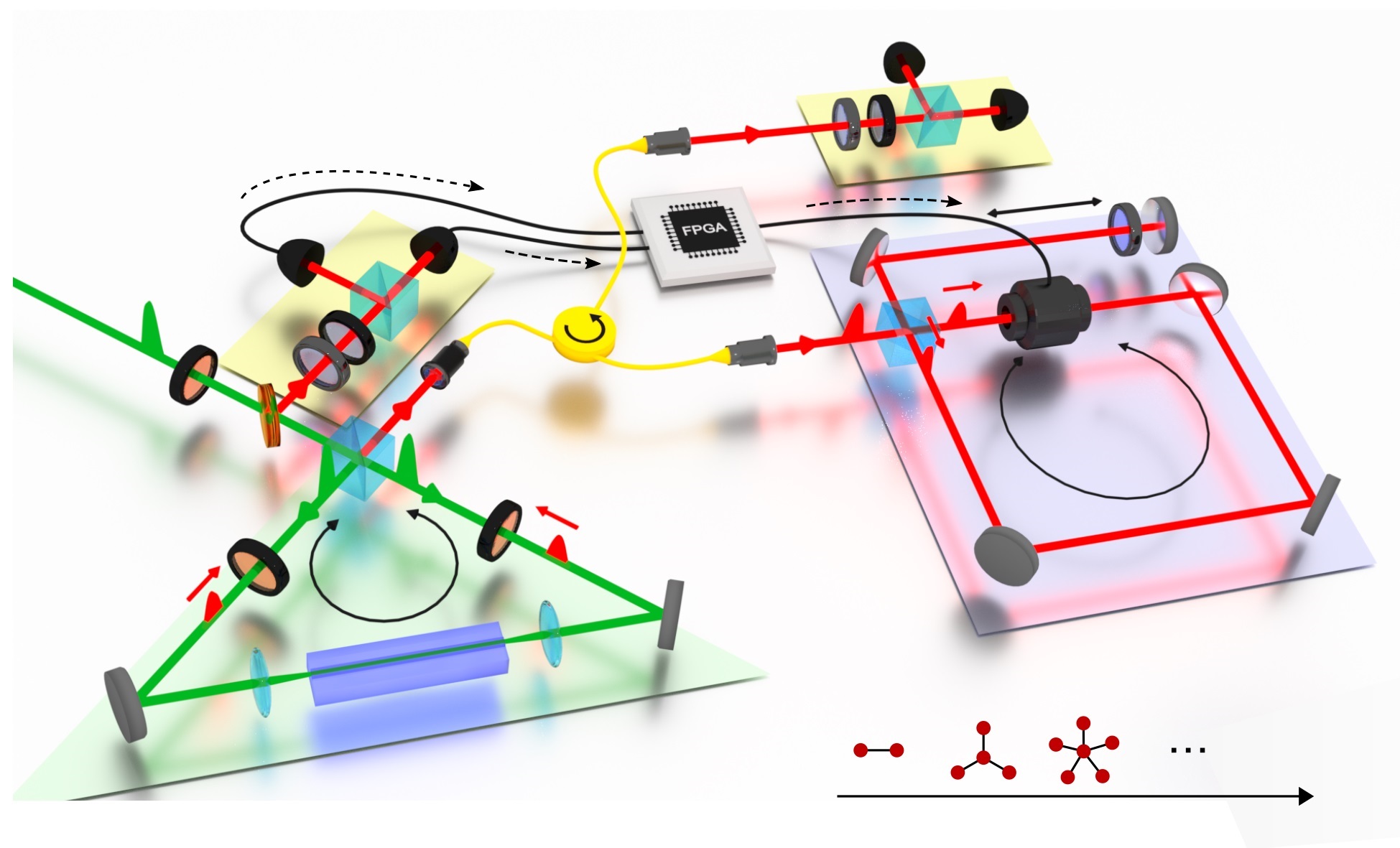

Research team members from Penn State include Rosson and Carroll as well as Chita Das, distinguished professor of computer science and engineering; Dan Kifer, associate professor of computer science and engineering; Suman Datta, professor of electrical engineering; and Lee Giles, professor of information sciences and technology. The Penn State group collaborates with researchers from seven other universities as well as with national labs, nonprofit organizations, and industry.

The research team has had several project-wide electronic meetings since January 2014. "We have a lot of synergies and technical exchanges, so we all know what everyone is doing and we’ve made some exciting progress," Narayanan said. "We have geographically and professionally diverse groups of people working really well together to meet this challenge."