August 1, 2013

By Bjorn Carey

Some mathematical simulations used to predict the outcomes of real events are so complex that they'll stump even today's top supercomputers. To incubate the next generation of supercomputers for tackling real-world problems, the National Nuclear Security Administration (NNSA) has selected Stanford as one of its three new Multidisciplinary Simulation Centers.

The Stanford effort, led by Gianluca Iaccarino, an associate professor of Mechanical Engineering and the Institute for Computational and Mathematical Engineering, will receive $3.2 million per year for the next five years under the NNSA's Predictive Science Academic Alliance Program II (PSAAP II). The University of Utah and the University of Illinois at Urbana-Champaign were selected to house the other two centers.

Participants in PSAAP II will devise new computing paradigms within the context of solving a practical engineering problem. The Stanford project will work on predicting the efficiency of a relatively untested and poorly understood method of harvesting solar energy.

The project will draw on expertise from the Mechanical Engineering, Aeronautics and Astronautics, Computer Science and Math departments.

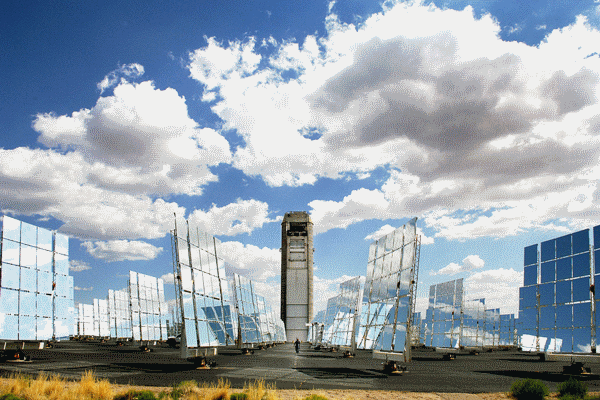

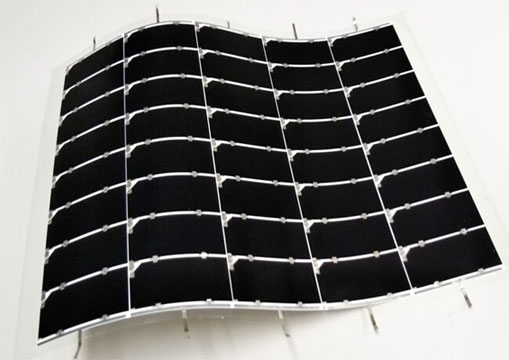

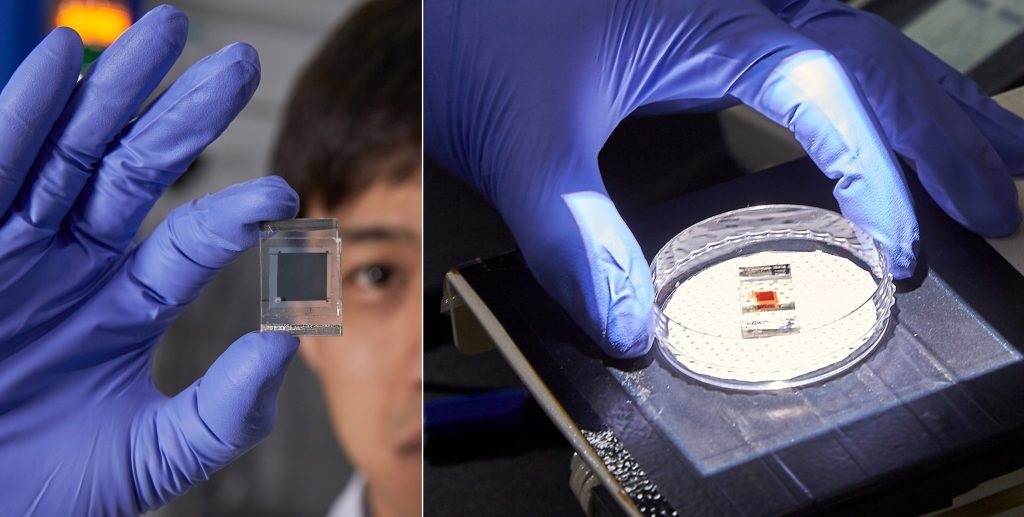

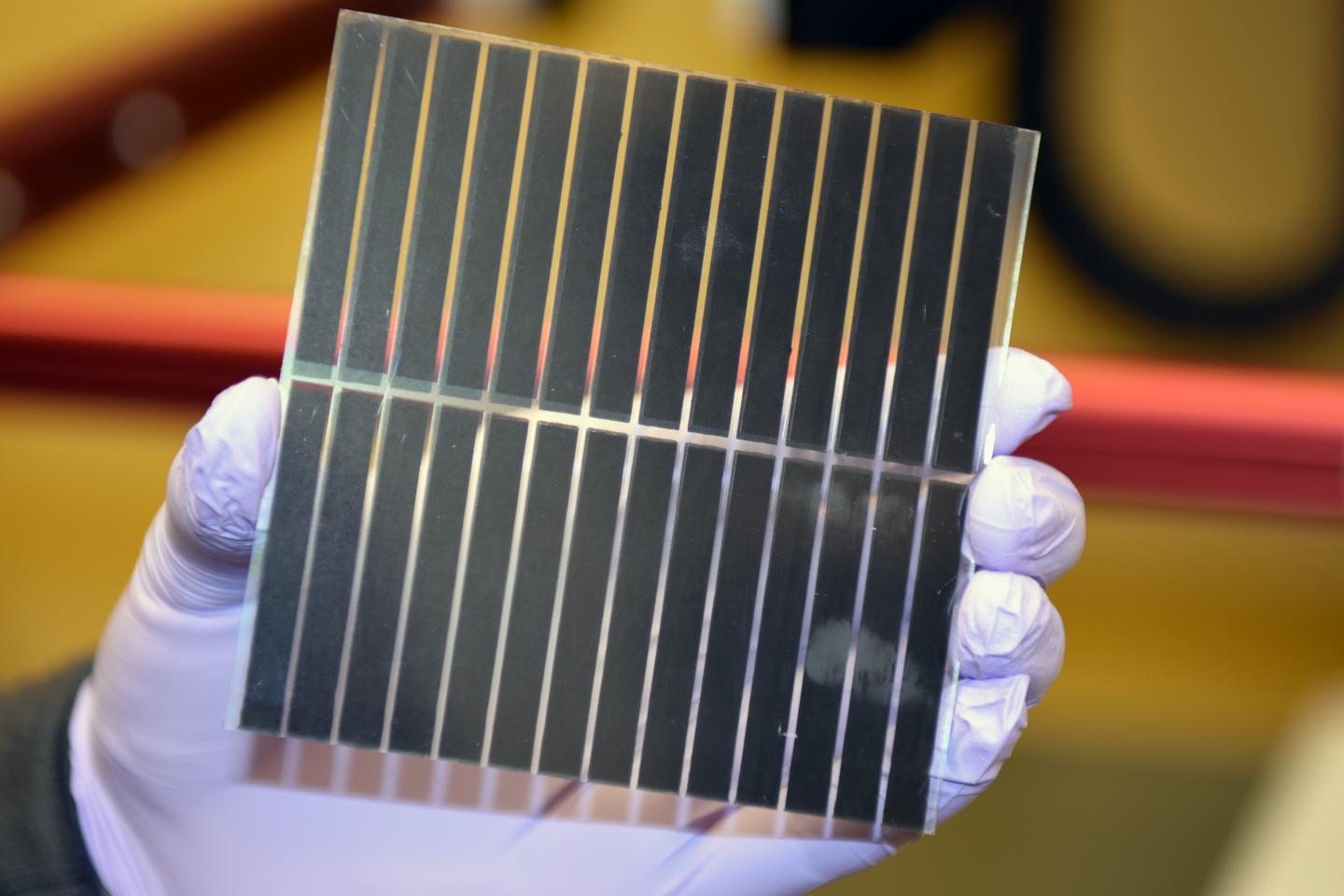

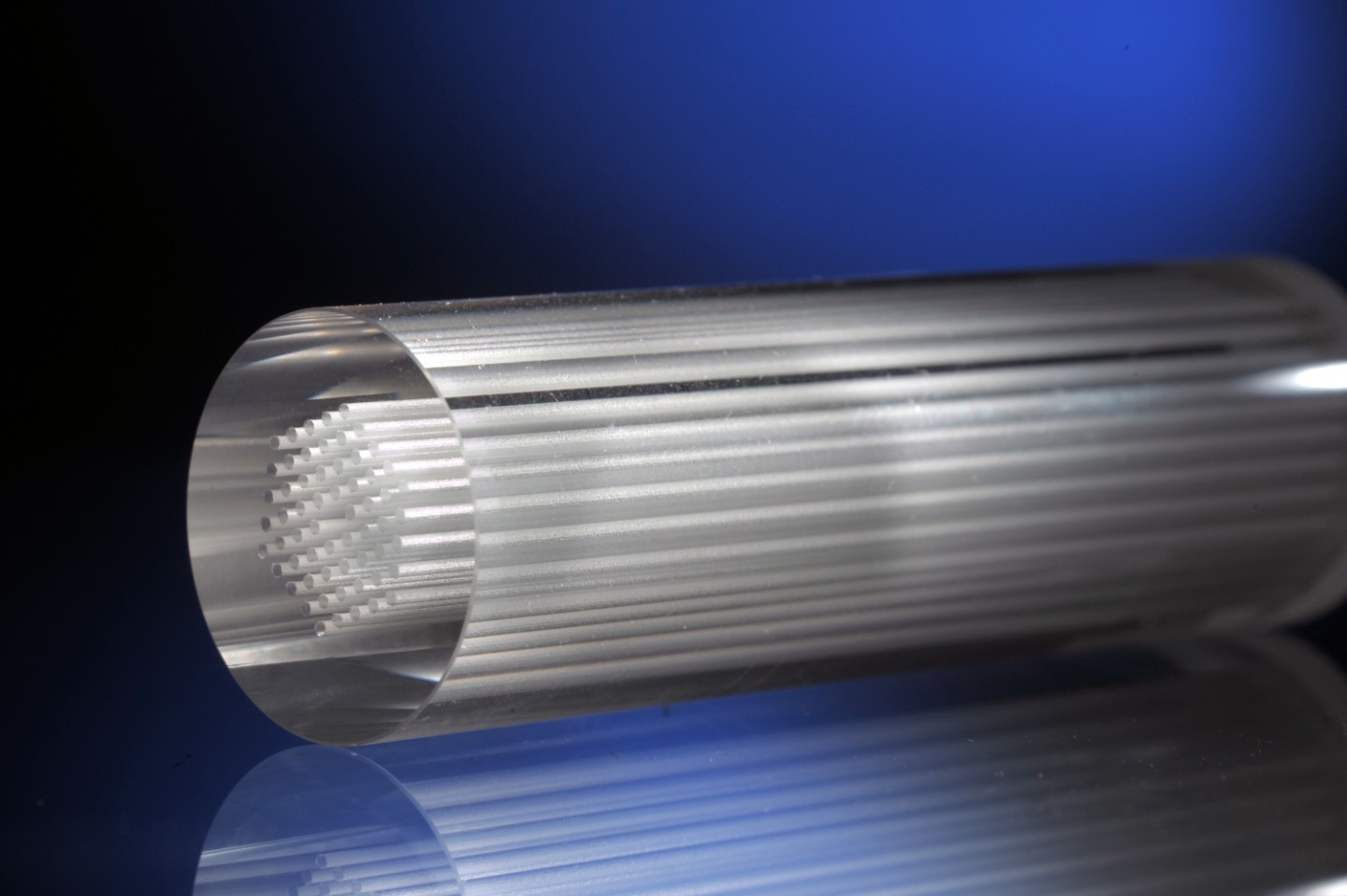

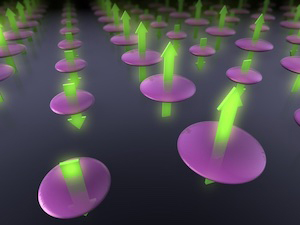

Traditional solar-thermal systems use mirrors to concentrate solar radiation on a solid surface and transfer energy to a fluid, the first step toward generating electricity. In the proposed system, fine particles suspended within the fluid would absorb sunlight and directly transfer the heat evenly throughout the fluid. This technique would allow for higher energy absorption and transfer rates, which would ultimately increase the efficiency of the overall system.

A critical aspect of assessing this technique involves predicting uncertainty within the system. For example, the alignment of the mirrors is imprecise and the suspended particles aren't of uniform size. "We need to rigorously assess the impacts of these sensitivities to be able to compute the efficiency of a system like this," Iaccarino said. "There is currently no supercomputer in the world that can do this, and no physical model."
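One common way to assess sensitivities of this kind is Monte Carlo sampling: draw the uncertain inputs at random, run the model for each draw, and look at the spread of the results. The sketch below is purely illustrative and is not the Stanford team's method. The function `toy_efficiency`, its formula, and every number in it (mirror error spread, particle sizes, the 0.6 peak efficiency) are invented placeholders standing in for a simulation that would in practice need a supercomputer.

```python
import math
import random
import statistics


def toy_efficiency(mirror_error_mrad, particle_diameter_um):
    """Hypothetical stand-in for an expensive simulation; not a physical model.

    Efficiency peaks at 0.6 for perfectly aligned mirrors and 10-micron
    particles, and drops as either input moves away from that point.
    """
    optical = 1.0 / (1.0 + (mirror_error_mrad / 5.0) ** 2)
    absorption = 1.0 - 0.02 * abs(particle_diameter_um - 10.0)
    return 0.6 * optical * max(absorption, 0.0)


def propagate_uncertainty(n_samples=10_000, seed=0):
    """Sample the uncertain inputs and return (mean, std dev) of efficiency."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    samples = []
    for _ in range(n_samples):
        # Assumed input distributions (illustrative only):
        mirror_error = rng.gauss(0.0, 2.0)                   # milliradians
        diameter = rng.lognormvariate(math.log(10.0), 0.2)   # microns
        samples.append(toy_efficiency(mirror_error, diameter))
    return statistics.mean(samples), statistics.stdev(samples)


if __name__ == "__main__":
    mean, spread = propagate_uncertainty()
    print(f"mean efficiency: {mean:.3f}, std dev: {spread:.3f}")
```

Each sample here costs microseconds. When a single evaluation is instead a full multi-physics simulation, thousands of such runs are what push the problem beyond current machines.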

To crack this problem, Stanford and the other program participants will need to address the other part of the NNSA's directive: developing programming environments and computational approaches that target an exascale computer, a machine 1,000 times faster than today's fastest supercomputers, by 2018. The overall task presents several challenges.

"The supercomputer paradigm has reached a physical apex," Iaccarino said. "Energy consumption is too high, the computers get too hot, and it's too expensive to compute with millions of commodity computers bundled together. Next-generation supercomputers will have completely different architectures."

A particular design challenge facing engineers is that just one faulty processing unit will halt a simulation. An exascale computer consisting of millions of units will be prone to frequent failure, and so scientists will need to design intelligent backup systems that will allow the simulation to continue running even when one portion of the computer has crashed.
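A rough back-of-the-envelope calculation shows why scale makes failure so frequent. The sketch below assumes that units fail independently and, for the second function, that each unit's lifetime is exponentially distributed. The unit counts, failure probabilities and lifetimes are illustrative assumptions, not figures from the NNSA or the Stanford team.

```python
import math


def prob_any_failure(n_units, p_unit_failure):
    """P(at least one of n independent units fails) = 1 - (1 - p)^n.

    Computed with log1p/expm1 to stay accurate when p is very small.
    """
    return -math.expm1(n_units * math.log1p(-p_unit_failure))


def mean_hours_to_first_failure(n_units, unit_mtbf_hours):
    """Mean time until the first of n units fails.

    Assumes independent, exponentially distributed unit lifetimes, for
    which the minimum of n lifetimes has mean MTBF / n.
    """
    return unit_mtbf_hours / n_units


if __name__ == "__main__":
    # Assumed: one million units, each with a one-in-a-million chance of
    # failing during a given run.
    print(f"P(run interrupted): {prob_any_failure(1_000_000, 1e-6):.3f}")

    # Assumed: each unit lasts about 1,000,000 hours (over a century) on average.
    hours = mean_hours_to_first_failure(1_000_000, 1_000_000)
    print(f"Mean time to first failure: {hours:.1f} hours")
```

Under these assumptions, even extremely reliable individual units leave a million-unit machine facing an interruption roughly every hour, which is why a simulation has to be able to keep running through a local crash.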

The ambiguity surrounding exascale architecture complicates matters on the software development end: Iaccarino and his colleagues will essentially have to program blind for such a system. The programming models they develop will need to be flexible enough to work on whatever computer model emerges as viable in 2018.

Stanford is particularly well suited to handling the task, Iaccarino said.

"Fifteen years ago, the Computer Science Department and the Mechanical Engineering Department joined forces to embrace massively parallel computing in solving challenging multi-physics engineering problems," he said.

That relationship, Iaccarino said, contributed to the NNSA continuing its 15-year collaboration with the university; Stanford is the only university to receive funding in every round since the program's inception.

In concert with the simulation work, the researchers will run a physical experiment on the solar collector to test the predictions and identify other critical sensitivities. Beyond the lab, Iaccarino said, the overall effort will spur new graduate-level courses and workshops dedicated to exploring the intersection of computational science and engineering.

Stanford will also collaborate with five universities on the project: the University of Michigan, the University of Minnesota, the University of Colorado-Boulder, the University of Texas-Austin and the State University of New York-Stony Brook.
