San Diego, Calif., Dec. 16, 2016 -- By combining 3D curved fiber bundles with spherical optics, photonics researchers at the University of California San Diego have developed a compact 360° video camera that captures 125 megapixels per frame and is useful for immersive virtual reality content.

The work, led by electrical engineering professor Joseph Ford at the UC San Diego Jacobs School of Engineering, was presented at the SPIE Defense + Commercial Sensing 2016 Conference.

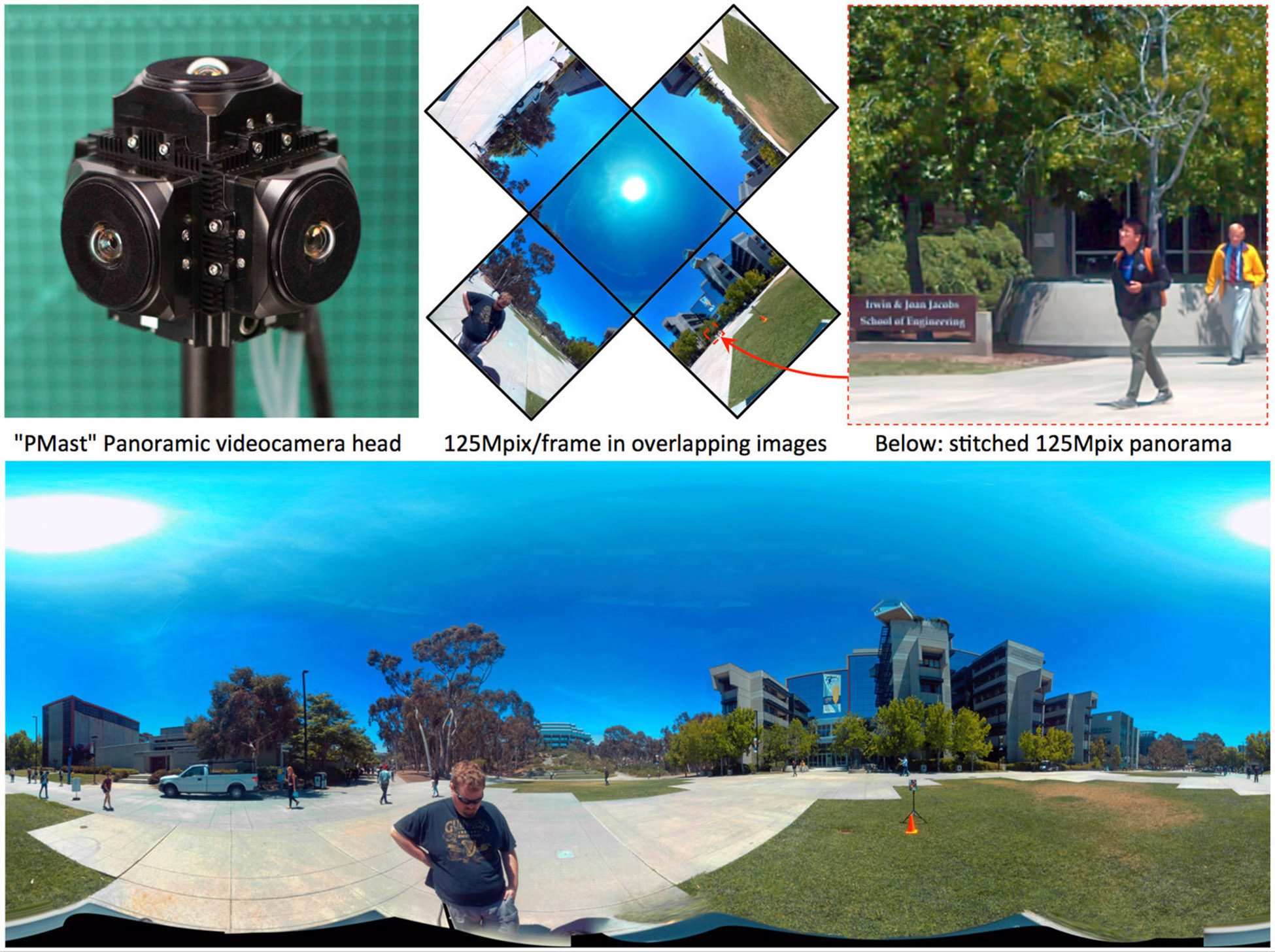

The panoramic "photonic mast" (PMast) video imager can capture cinematographic-grade immersive video content without the obtrusive bulk of more conventional multicamera rigs. "The advantage of the research prototype is its small size compared to bulky conventional lenses required by DSLR and cinematic videocameras, and its high resolution and light collection relative to small systems like the GoPro camera arrays," Ford said.

Photograph of the photonic mast (PMast) head (top left). Five discrete 25 megapixel images from it are shown (top center), along with a zoomed-in portion of one image (top right) and the total stitched panorama (bottom). Image courtesy of UC San Diego Photonic Systems Integration Laboratory

Although compact 360° cameras can be made from an array of small-aperture smartphone imagers, their small (typically 1.1 μm) pixels provide low dynamic range. Digital single-lens reflex (DSLR) and cinematographic imagers have larger 4–8 μm pixels, but they require correspondingly longer focal length lenses, which are bulky and have low light collection (typically F/2.8 to F/4, where the F-number is the focal length divided by the diameter of the lens aperture).
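To make the F-number comparison concrete, here is a minimal sketch of the arithmetic. It assumes the standard paraxial rule that image-plane brightness scales as 1/N² for an F/N lens, with equal scene brightness and lens transmission; the 50 mm lens is a hypothetical example, not one of the lenses in the article.

```python
def f_number(focal_length_mm: float, aperture_diameter_mm: float) -> float:
    """F-number N = focal length / aperture diameter."""
    return focal_length_mm / aperture_diameter_mm


def relative_light_collection(n_a: float, n_b: float) -> float:
    """Light per unit image area collected at F/n_a relative to F/n_b.

    Assumes irradiance scales as 1/N**2 (paraxial approximation,
    same scene brightness and transmission for both lenses).
    """
    return (n_b / n_a) ** 2


# Hypothetical 50 mm lens with a 12.5 mm aperture: an F/4 lens.
print(f_number(50.0, 12.5))  # 4.0

# Opening up from F/4 to F/2.8 roughly doubles the collected light.
print(round(relative_light_collection(2.8, 4.0), 2))  # 2.04
```

By the same rule, an F/1.35 lens collects roughly 8.8 times as much light per unit image area as an F/4 lens.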

The panoramic PMast video imager has the large-pixel sensors and relatively long focal lengths needed for high dynamic range and resolution, Ford explained.

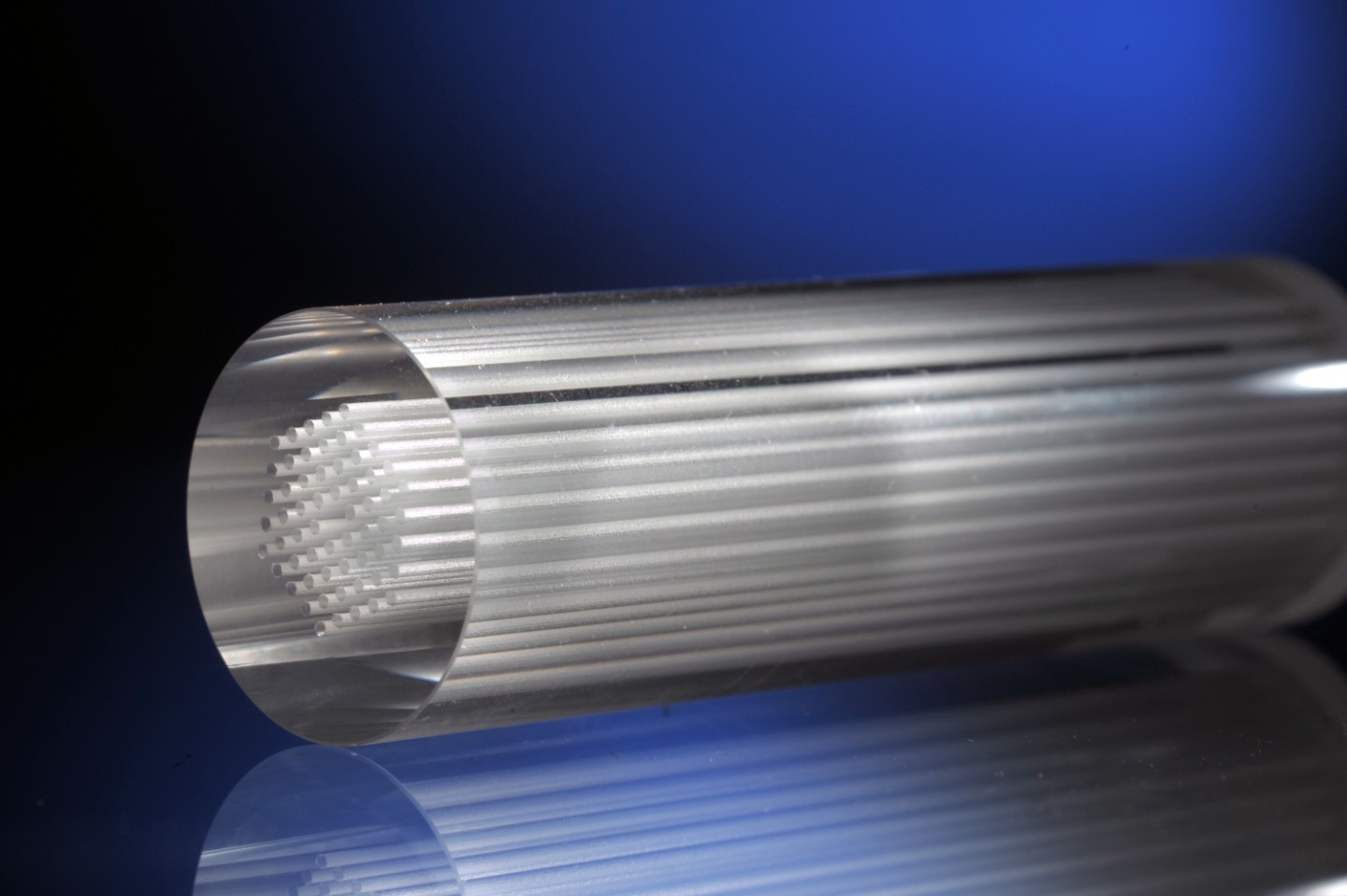

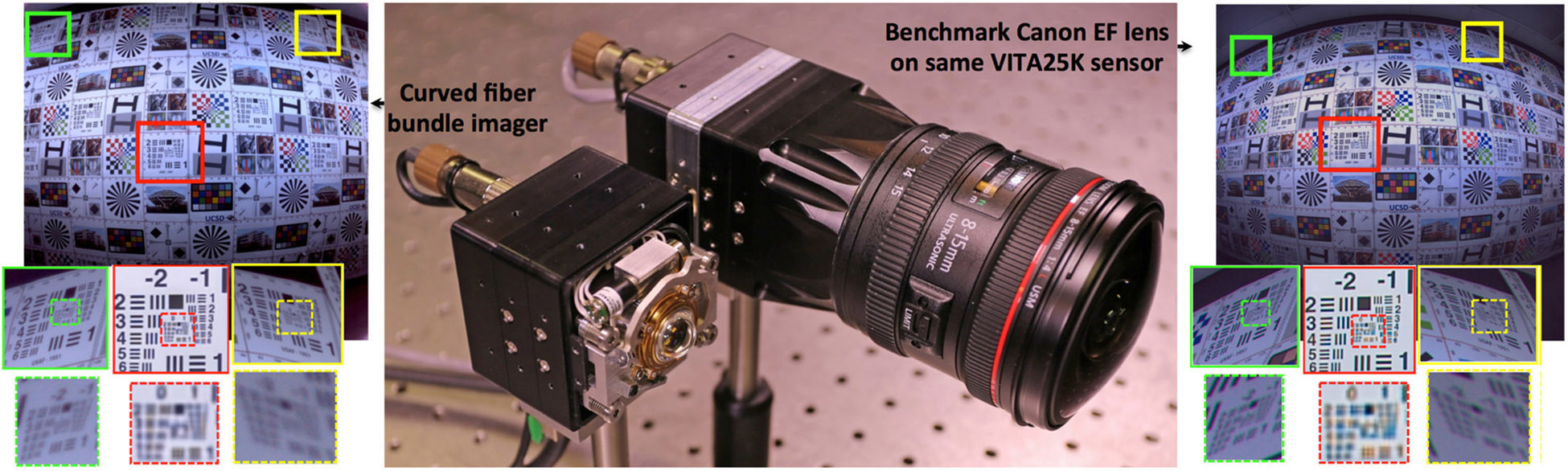

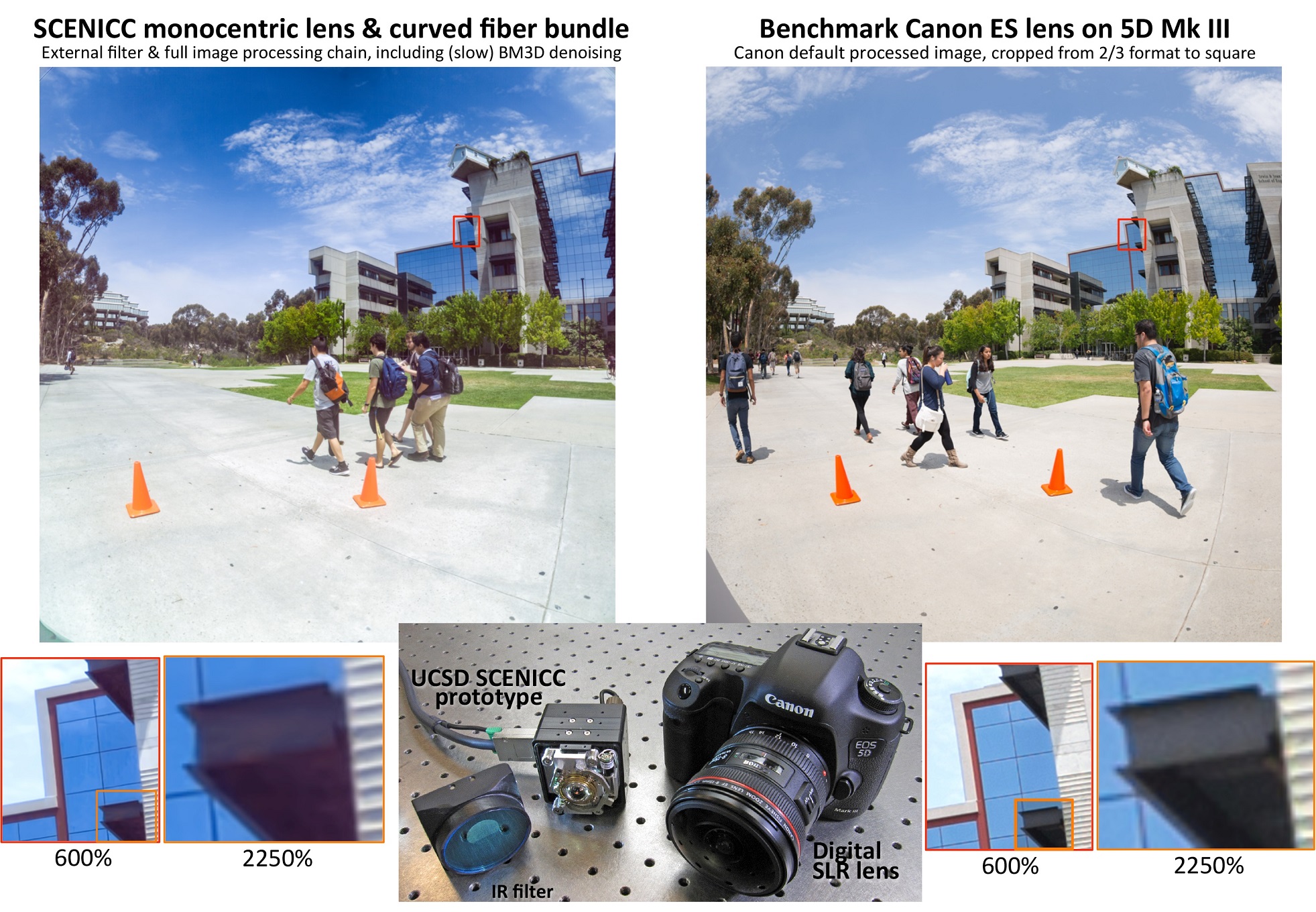

Center: Photograph of the 126°-field curved fiber bundle imager integrated with a 25 megapixel sensor, next to a conventional lens on the same sensor. Example images obtained from the two setups are shown on the left and right. The F/1.35 monocentric lens, coupled to the sensor through the curved fiber bundle, is smaller, causes less image distortion, and collects more light (left) than the F/4 conventional lens (right). Image courtesy of UC San Diego Photonic Systems Integration Laboratory

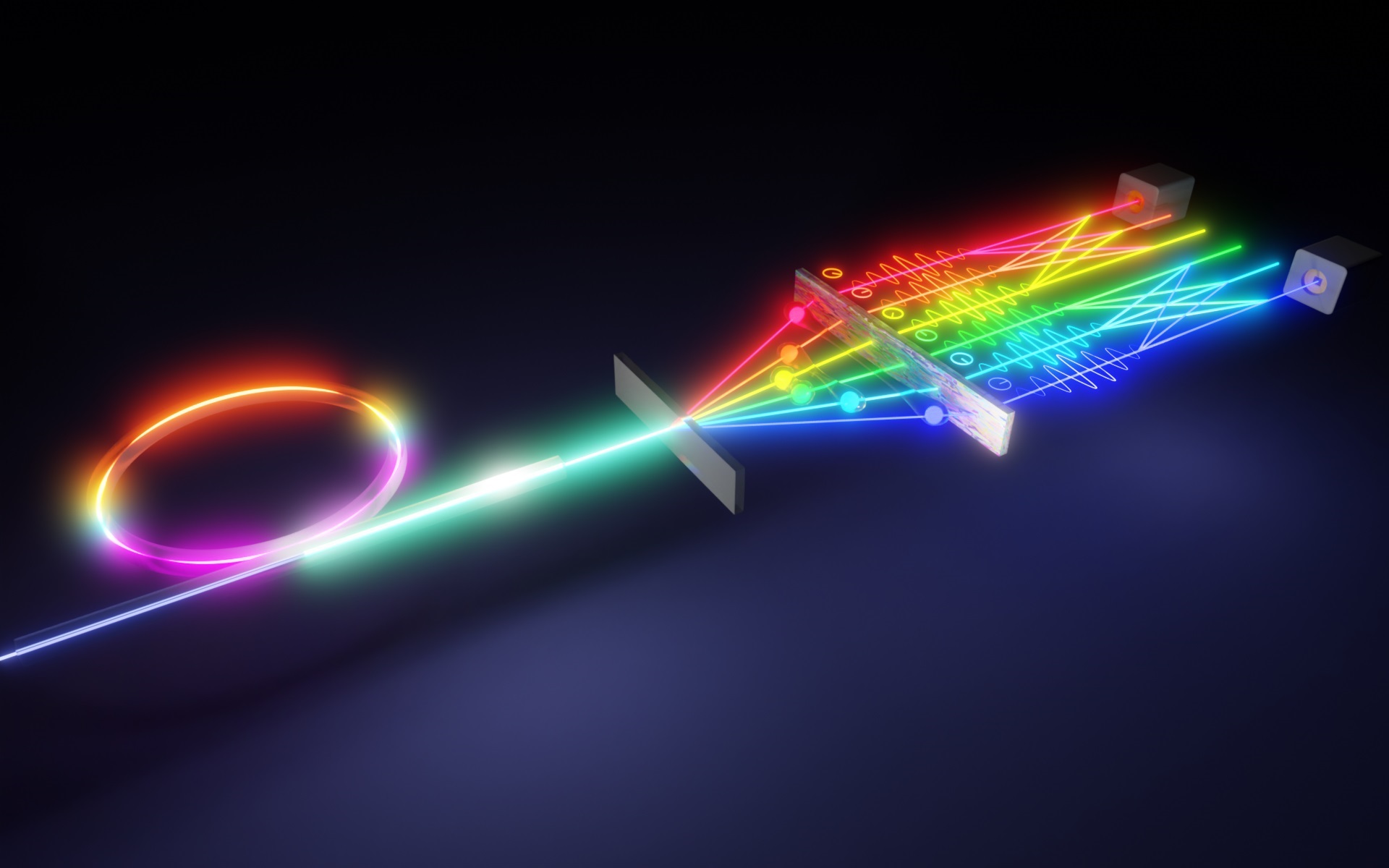

To develop their new system, researchers in Ford's Photonic Systems Integration Laboratory at UC San Diego used an approach to panoramic imaging called "monocentric optics," in which all surfaces, including the image surface, are concentric hemispheres. The symmetry of these lenses eliminates lateral color and off-axis aberrations (astigmatism and coma). In addition, the simple lens structures can be designed to correct spherical aberration and axial color, yielding extraordinarily high resolution and light collection over a wide angle. The resulting curved image can be coupled to a conventional flat focal plane via a fiber bundle faceplate with a curved input face and a flat output face. Fiber faceplates are solid glass elements made of small, high-index optical fibers separated by a thin, low-index cladding, and are used for nonimaging transfer of light between the input and output faces.
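The geometry behind monocentric imaging can be sketched in a few lines. For an object at infinity, every field angle sees the same spherically symmetric lens, so the image forms on a sphere of radius f centered on the lens, and a point at field angle θ lands at arc length f·θ from the axis. How the faceplate then maps that sphere onto the flat sensor depends on its design, which the article does not specify; the `flat_radius_mm` function below assumes, hypothetically, that the fibers run parallel to the optical axis, and the 12 mm focal length is likewise an illustrative value.

```python
import math


def arc_position_mm(focal_length_mm: float, field_angle_deg: float) -> float:
    """Distance along the spherical image surface from the optical axis.

    For an ideal monocentric lens (object at infinity), the image surface is
    a sphere of radius f centered on the lens, so angle theta maps to f * theta.
    """
    return focal_length_mm * math.radians(field_angle_deg)


def flat_radius_mm(focal_length_mm: float, field_angle_deg: float) -> float:
    """Radial position on the flat output face of the faceplate.

    HYPOTHETICAL geometry: fibers parallel to the optical axis, so each point
    on the sphere is carried straight back to radius f * sin(theta).
    """
    return focal_length_mm * math.sin(math.radians(field_angle_deg))


f = 12.0         # hypothetical focal length, mm
half_field = 63  # half of a 126° field of view, degrees

print(round(arc_position_mm(f, half_field), 2))  # 13.19
print(round(flat_radius_mm(f, half_field), 2))   # 10.69
```

Under this assumed faceplate geometry, the edge of the field is compressed onto the flat sensor (10.69 mm versus 13.19 mm along the curved surface), which is one reason the faceplate design matters for the final image mapping.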

SCENICC Imagers Compared to Fisheye Lens on DSLR

The PMast panoramic video camera head consists of five identical 25 megapixel imagers, connected by a multimode fiber-optic ribbon cable to a rack of five servers (each with 1 TB of solid-state storage) that process and store the video stream. The heat (approximately 60 W) generated by the imagers when they run at full resolution and 24 frames per second is removed by water cooling. The lenses for this imager are slightly modified versions of lenses previously developed in Ford's lab, with an F/2.5 aperture, a fixed protective dome, and a removable IR/color/apodization filter.
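A rough back-of-the-envelope estimate shows why the video stream needs a rack of servers. This sketch uses only the figures above (five 25 megapixel imagers, 24 frames per second, 1 TB per server) plus two labeled assumptions that the article does not state: uncompressed 12-bit raw pixels, and one server handling each imager's stream.

```python
PIXELS_PER_IMAGER = 25e6      # 25 megapixels (from the article)
NUM_IMAGERS = 5               # five identical imagers (from the article)
FPS = 24                      # frames per second (from the article)
SSD_BYTES_PER_SERVER = 1e12   # 1 TB solid-state storage per server
BITS_PER_PIXEL = 12           # ASSUMPTION: raw bit depth is not stated

# Total pixels captured per panoramic frame and per second.
pixels_per_frame = PIXELS_PER_IMAGER * NUM_IMAGERS
pixel_rate = pixels_per_frame * FPS

# ASSUMPTION: one server per imager, storing uncompressed raw data.
bytes_per_second_per_server = PIXELS_PER_IMAGER * FPS * BITS_PER_PIXEL / 8
minutes_to_fill = SSD_BYTES_PER_SERVER / bytes_per_second_per_server / 60

print(f"{pixels_per_frame / 1e6:.0f} MP per frame")                       # 125 MP per frame
print(f"{pixel_rate / 1e9:.1f} Gpixel/s total")                           # 3.0 Gpixel/s total
print(f"{bytes_per_second_per_server / 1e9:.1f} GB/s per server")         # 0.9 GB/s per server
print(f"{minutes_to_fill:.1f} minutes to fill 1 TB")                      # 18.5 minutes to fill 1 TB
```

Under these assumptions each server would fill its 1 TB in under 20 minutes of uncompressed recording; compression or a different bit depth would change that figure proportionally.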

More details on the PMast panoramic video imaging system are described in a SPIE Newsbrief article.

Members of the research team are Joseph Ford, Salman Karbasi, Ilya Agurok, Igor Stamenov, Glenn Schuster, Nojan Motamedi, Ash Arianpour and William Mellette of UC San Diego; and Adam Johnson, Ryan Tennill, Rick Morrison and Ron Stack of Distant Focus Corporation, Champaign, Illinois.

The research was conducted under the Defense Advanced Research Projects Agency (DARPA) SCENICC (Soldier Centric Imaging via Computational Cameras) program.